Build applications with locally-hosted embedding models using Ollama and YugabyteDB

This tutorial shows how you can use Ollama to generate text embeddings with a locally running model. It features a Node.js application that generates embeddings for news article headlines and descriptions and stores them in a YugabyteDB database using the pgvector extension.

Prerequisites

- Install YugabyteDB v2.19+

- Install Ollama

- Install Node.js v18+

- Install Docker

Set up the application

Download the application and provide settings specific to your deployment:

Clone the repository.

git clone https://github.com/YugabyteDB-Samples/ollama-news-archive.git
cd ollama-news-archive

Install the application dependencies from the project root.

npm install
(cd backend && npm install)
(cd news-app-ui && npm install)

Configure the application environment variables in {project_dir}/backend/.env.

Set up YugabyteDB

Start a 3-node YugabyteDB cluster in Docker (or feel free to use another deployment option):

# NOTE: if the ~/yb_docker_data directory already exists on your machine, delete and re-create it
mkdir ~/yb_docker_data
docker network create custom-network

docker run -d --name yugabytedb-node1 --net custom-network \
  -p 15433:15433 -p 7001:7000 -p 9001:9000 -p 5433:5433 \
  -v ~/yb_docker_data/node1:/home/yugabyte/yb_data --restart unless-stopped \
  yugabytedb/yugabyte:2.23.0.0-b710 \
  bin/yugabyted start \
  --base_dir=/home/yugabyte/yb_data --background=false

docker run -d --name yugabytedb-node2 --net custom-network \
  -p 15434:15433 -p 7002:7000 -p 9002:9000 -p 5434:5433 \
  -v ~/yb_docker_data/node2:/home/yugabyte/yb_data --restart unless-stopped \
  yugabytedb/yugabyte:2.23.0.0-b710 \
  bin/yugabyted start --join=yugabytedb-node1 \
  --base_dir=/home/yugabyte/yb_data --background=false

docker run -d --name yugabytedb-node3 --net custom-network \
  -p 15435:15433 -p 7003:7000 -p 9003:9000 -p 5435:5433 \
  -v ~/yb_docker_data/node3:/home/yugabyte/yb_data --restart unless-stopped \
  yugabytedb/yugabyte:2.23.0.0-b710 \
  bin/yugabyted start --join=yugabytedb-node1 \
  --base_dir=/home/yugabyte/yb_data --background=false

The database connectivity settings are provided in the {project_dir}/backend/.env file. You don't need to change them if you started the cluster with the preceding commands.

To confirm that the database is up and running, navigate to the YugabyteDB UI at http://127.0.0.1:15433.
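The three docker run commands follow a fixed pattern. Node n publishes each container port on a host port offset by n - 1. The following sketch makes that mapping explicit and builds a YSQL connection URL for each node. It assumes the default yugabyte user and database on this local, unauthenticated cluster. The function names are illustrative and are not part of the sample application.

```javascript
// Host ports published by the docker run commands in this tutorial.
// Node n (1-based) maps each container port to a host port offset by n - 1.
function publishedPorts(n) {
  return {
    ui: 15432 + n,       // yugabyted UI (container port 15433)
    masterUi: 7000 + n,  // yb-master web UI (container port 7000)
    tserverUi: 9000 + n, // yb-tserver web UI (container port 9000)
    ysql: 5432 + n,      // YSQL, the PostgreSQL-compatible API (container port 5433)
  };
}

// YSQL connection URL for node n, assuming the default "yugabyte" user and
// database on a local cluster started without authentication.
function ysqlUrl(n, host = "127.0.0.1") {
  return `postgresql://yugabyte@${host}:${publishedPorts(n).ysql}/yugabyte`;
}

for (const n of [1, 2, 3]) {
  console.log(`node${n}`, publishedPorts(n), ysqlUrl(n));
}
```

Because every node exposes YSQL, a client can connect through any of ports 5433, 5434, or 5435.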

Get started with Ollama

Ollama provides installers for a variety of platforms, and the Ollama models library provides numerous models for a variety of use cases. This sample application uses nomic-embed-text to generate text embeddings. Chat models such as Llama 3 are typically run interactively with the Ollama CLI. Embeddings, by contrast, can be generated by naming the desired embedding model in a request to Ollama's REST API.
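As a minimal sketch of that REST call from Node.js, the following function posts a model name and prompt to Ollama's /api/embeddings endpoint on the default port 11434. It then returns the embedding array. It uses the global fetch available in Node.js 18+. The fetchImpl parameter is an illustrative addition that lets you exercise the function without a running server. The sample application itself uses the ollama npm client instead of raw fetch.

```javascript
// Request an embedding from Ollama's REST API.
// fetchImpl defaults to the global fetch (Node.js 18+) and can be replaced in tests.
async function embed(prompt, {
  model = "nomic-embed-text",
  baseUrl = "http://localhost:11434", // Ollama's default address
  fetchImpl = fetch,
} = {}) {
  const res = await fetchImpl(`${baseUrl}/api/embeddings`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ model, prompt }),
  });
  if (!res.ok) {
    throw new Error(`Ollama request failed with HTTP ${res.status}`);
  }
  const { embedding } = await res.json();
  return embedding; // array of numbers
}
```

With Ollama running and the model pulled, `await embed("goalkeeper")` should return the same kind of vector as the curl request in this section.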

Pull the model using the Ollama CLI.

ollama pull nomic-embed-text:latest

With Ollama up and running on your machine, run the following command to verify the installation:

curl http://localhost:11434/api/embeddings -d '{ "model": "nomic-embed-text", "prompt": "goalkeeper" }'

The command returns a 768-dimensional embedding (truncated below) that can be stored in the database and used in similarity searches:

{"embedding":[-0.6447112560272217,0.7907757759094238,-5.213506698608398,-0.3068113327026367,1.0435500144958496,-1.005386233329773,0.09141742438077927,0.4835842549800873,-1.3404604196548462,-0.2027662694454193,-1.247795581817627,1.249923586845398,1.9664828777313232,-0.4091946482658386,0.3923419713973999,...]}
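pgvector accepts vectors as text in the form [x,y,z]. That is why the application code in this tutorial formats embeddings with `[${resp.embedding}]`. The following sketch does the same conversion explicitly and also checks the dimension. A mismatch between the model's output size and the table's vector column would otherwise only surface as a database error. The helper name is hypothetical. The default of 768 assumes nomic-embed-text and a matching vector(768) column.

```javascript
// Convert an embedding array to pgvector's text input format, e.g. "[0.1,-0.2,0.3]".
// expectedDim defaults to 768 (nomic-embed-text); it must match the vector column size.
function toPgVector(embedding, expectedDim = 768) {
  if (!Array.isArray(embedding) || embedding.length !== expectedDim) {
    throw new Error(`expected ${expectedDim} dimensions, got ${embedding?.length}`);
  }
  if (!embedding.every(Number.isFinite)) {
    throw new Error("embedding contains a non-finite value");
  }
  // Same result as the `[${array}]` template literal, which joins with commas
  return `[${embedding.join(",")}]`;
}
```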

Load the schema and seed data

This application stores news stories in a news_stories table, which is defined in the project's schema file.

Copy the schema to the first node's Docker container as follows:

# docker cp needs the target directory to exist inside the container
docker exec yugabytedb-node1 mkdir -p /home/database
docker cp {project_dir}/database/schema.sql yugabytedb-node1:/home/database/

Copy the seed data file to the Docker container as follows:

docker cp {project_dir}/sql/data.csv yugabytedb-node1:/home/database/

Create the schema and load the seed data:

docker exec -it yugabytedb-node1 bin/ysqlsh -h yugabytedb-node1 -f /home/database/schema.sql
docker exec -it yugabytedb-node1 bin/ysqlsh -h yugabytedb-node1 -c "\COPY news_stories(link,headline,category,short_description,authors,date,embeddings) from '/home/database/data.csv' DELIMITER ',' CSV HEADER;"

Start the application

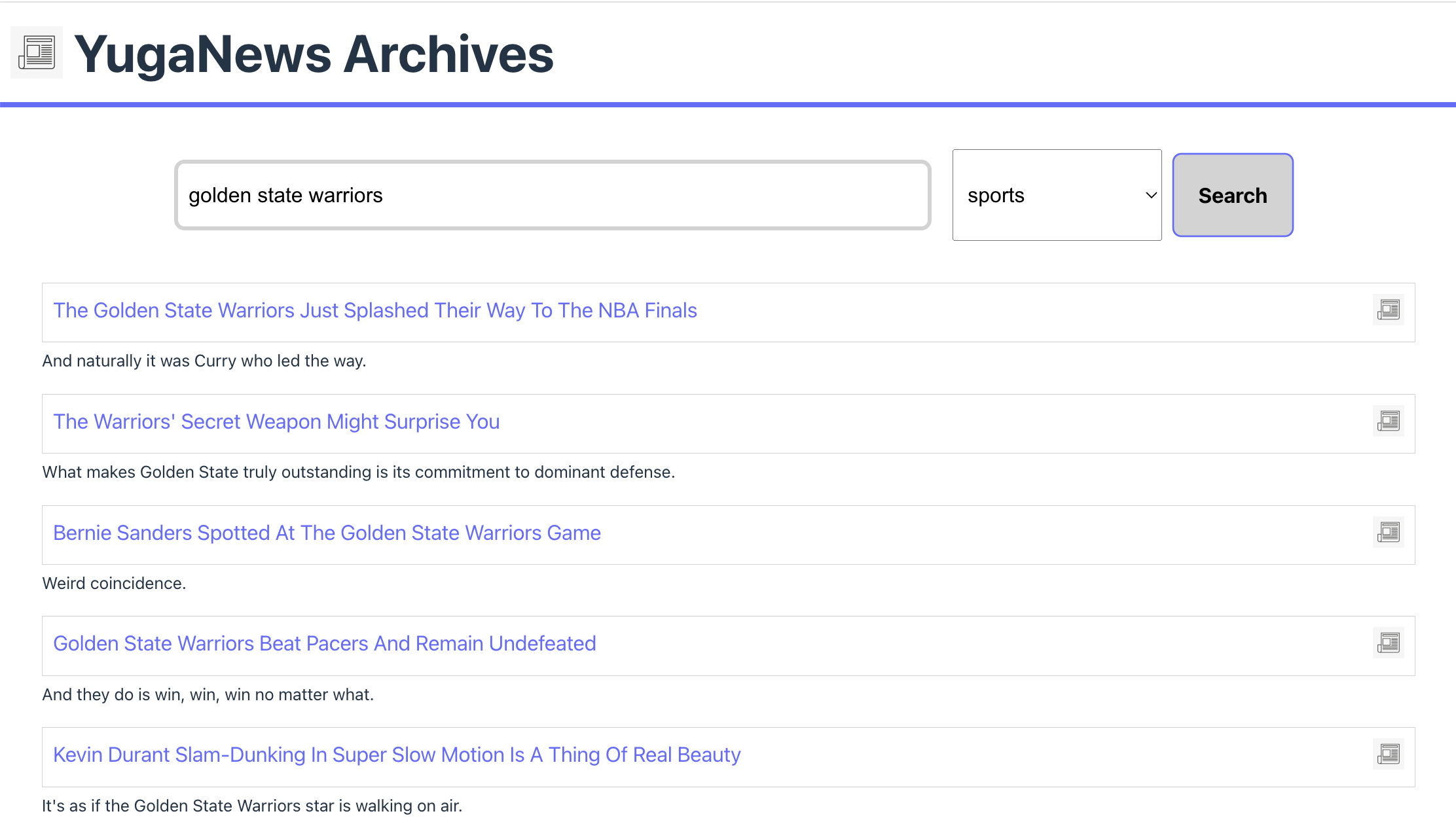

This Node.js application takes a natural-language query and a news category as input. It converts the query into an embedding with the locally running model, then uses pgvector to run a similarity search in YugabyteDB and returns the closest-matching stories.

Start the API server:

node {project_dir}/backend/index.js

When the server is ready, it logs "API server running on port 3000."

Query the /api/search endpoint with a relevant prompt and category. For instance:

curl "localhost:3000/api/search?q=olympic%20gold%20medal&category=SPORTS"

The endpoint returns the closest-matching stories:

{
  "data": [
    {
      "headline": "Mikaela Shiffrin Wins Gold In Women's Giant Slalom",
      "short_description": "It was her second gold medal ever after her 2014 Sochi Winter Olympics win.",
      "link": "https://www.huffingtonpost.com/entry/mikaela-shiffrin-gold-giant-slalom_us_5a8523a4e4b0ab6daf45c6ec"
    },
    {
      "headline": "Baby Watching Her Olympian Dad Compete On TV Will Give You Gold Medal Feels",
      "short_description": "She's got her cute mojo working for Canada's Winter Olympics curling team.",
      "link": "https://www.huffingtonpost.com/entry/baby-watching-olympics-dad-tv-curling_us_5a842772e4b0adbaf3d94ad2"
    },
    {
      "headline": "Jimmy Kimmel's Own Winter Olympics Produce Another Gold Medal Moment",
      "short_description": "\"I think they're even better than the expensive Olympics,\" the host said.",
      "link": "https://www.huffingtonpost.com/entry/jimmy-kimmels-own-olympics-produce-another-gold-medal-moment_us_5a85779be4b0774f31d27528"
    },
    {
      "headline": "The Most Dazzling Moments From The 2018 Winter Olympics Opening Ceremony",
      "short_description": "The 2018 Winter Games are officially open.",
      "link": "https://www.huffingtonpost.com/entry/winter-olympics-opening-ceremony-photos_us_5a7d968de4b08dfc930304da"
    }
  ]
}
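The q parameter contains spaces, so it must be URL-encoded before it is sent. The following sketch shows one way to build the request URL from Node.js. It uses the standard URL and URLSearchParams classes, which encode spaces as "+" and escape reserved characters such as "&". The function name and default base URL are illustrative.

```javascript
// Build a correctly encoded /api/search URL for the local API server.
function searchUrl(q, category, base = "http://localhost:3000") {
  const url = new URL("/api/search", base);
  url.searchParams.set("q", q);
  url.searchParams.set("category", category);
  return url.toString();
}

console.log(searchUrl("olympic gold medal", "SPORTS"));
// http://localhost:3000/api/search?q=olympic+gold+medal&category=SPORTS
```

Express decodes "+" back into a space, so req.query.q receives "olympic gold medal". To call the endpoint, pass the result to fetch, for example `fetch(searchUrl("olympic gold medal", "SPORTS")).then((r) => r.json())`.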

From the news-app-ui directory, run the UI and visit http://localhost:5173 to search the news archives.

npm run dev

VITE ready in 138 ms
➜ Local: http://localhost:5173/

Review the application

The Node.js application relies on the nomic-embed-text model, running in Ollama, to generate text embeddings for user input. It then runs a similarity search in YugabyteDB with these embeddings to find the most relevant news stories.

// index.js
const express = require("express");
const ollama = require("ollama"); // Ollama Node.js client
const { Pool } = require("@yugabytedb/pg"); // the YugabyteDB Smart Driver for Node.js

...

// /api/search endpoint
app.get("/api/search", async (req, res) => {
  const query = req.query.q;
  const category = req.query.category;

  if (!query) {
    return res.status(400).json({ error: "Query parameter is required" });
  }

  try {
    // Generate a text embedding for the query using the Ollama API
    const data = {
      model: "nomic-embed-text",
      prompt: query,
    };
    const resp = await ollama.default.embeddings(data);

    // Format the embedding as a pgvector literal, e.g. "[0.12,-0.34,...]"
    const embeddings = `[${resp.embedding}]`;

    // Cosine-distance (<=>) search, restricted to the requested category
    const results = await pool.query(
      "SELECT headline, short_description, link FROM news_stories WHERE category = $2 ORDER BY embeddings <=> $1 LIMIT 5",
      [embeddings, category]
    );

    res.json({ data: results.rows });
  } catch (error) {
    console.error("Error generating embeddings or querying the database:", error);
    res.status(500).json({ error: "Internal server error" });
  }
});

...

The /api/search endpoint uses the Ollama JavaScript library to send the embedding model name and the user's query to Ollama, which returns a vector representation of the query. The endpoint then uses that vector in a cosine similarity search over the stories stored in YugabyteDB, using pgvector's <=> cosine distance operator. Filtering by news category first narrows the search space, which reduces latency.
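To make the ranking concrete, the following in-memory sketch computes the same ordering as the endpoint's SQL. It keeps only rows in the requested category, orders them by cosine distance (1 minus cosine similarity, which is what pgvector's <=> returns), and keeps the top k. This is an illustration of the math only. pgvector evaluates the query inside the database and may use an index. The function names and the story shape are hypothetical.

```javascript
// Cosine distance = 1 - (a . b) / (|a| * |b|), the value pgvector's <=> returns.
// Returns NaN if either vector has zero length (norm 0).
function cosineDistance(a, b) {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return 1 - dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// In-memory analogue of:
//   WHERE category = $2 ORDER BY embeddings <=> $1 LIMIT k
function searchInMemory(stories, queryVec, category, k = 5) {
  return stories
    .filter((s) => s.category === category) // pre-filter shrinks the candidate set
    .map((s) => ({ ...s, distance: cosineDistance(queryVec, s.embedding) }))
    .sort((x, y) => x.distance - y.distance) // smallest distance = most similar
    .slice(0, k);
}
```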

This application is quite straightforward, but before executing similarity searches, embeddings need to be generated for each news story and subsequently stored in the database. You can see how this is done in the generate_embeddings.js script.

// generate_embeddings.js
const ollama = require("ollama");
const fs = require("fs");
const path = require("path");
const lineReader = require("line-reader");

...

const processJsonlFile = async (filePath) => {
  return new Promise((resolve, reject) => {
    let newsStories = [];
    let linesRead = 0;

    lineReader.eachLine(filePath, async (line, last, callback) => {
      try {
        const jsonObject = JSON.parse(line);

        // Embed the headline and short description together
        const data = {
          model: "nomic-embed-text",
          prompt: `${jsonObject.headline} ${jsonObject.short_description}`,
        };
        const embeddings = await ollama.default.embeddings(data);
        jsonObject.embeddings = `[${embeddings.embedding}]`;

        newsStories.push(jsonObject);
        linesRead += 1;
        console.log(linesRead); // progress
      } catch (error) {
        console.error("Error processing line:", error);
      }

      // Write a batch every 100 stories and flush the remainder on the last line.
      // This runs even if the current line failed, so the promise always resolves.
      if (newsStories.length === 100 || (last && newsStories.length > 0)) {
        writeToCSV(newsStories);
        newsStories = [];
      }

      if (last) {
        return resolve();
      }
      callback();
    });
  });
};

This script reads a JSON Lines file in which each line represents a news story. Embeddings are generated from a string that combines each story's headline and short description, which provides enough context for similarity searches. The records are then appended to an output CSV file in batches, to be copied to the database later. Note that similarity searches must use the same embedding model that produced the stored embeddings, because vectors from different models are not comparable and can differ in dimension. This process would need to be repeated if you switch to a different model.
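The writeToCSV helper is elided in the excerpt above. The following is a hypothetical sketch of the serialization it needs to perform so that the \COPY command in this tutorial can load the output. The embedding literal "[x,y,...]" contains commas, so string fields are double-quoted, with embedded quotes doubled. Missing values are written as unquoted empty fields, which PostgreSQL's CSV format reads as NULL. The column order is taken from the \COPY command. The function names are illustrative.

```javascript
// Columns in the order used by the \COPY news_stories(...) command.
const COLUMNS = ["link", "headline", "category", "short_description", "authors", "date", "embeddings"];

// Quote a field: wrap in double quotes and double any embedded quotes.
// null/undefined become an unquoted empty field, which CSV COPY treats as NULL.
function csvField(value) {
  if (value == null) return "";
  return `"${String(value).replace(/"/g, '""')}"`;
}

function toCsvRow(story) {
  return COLUMNS.map((c) => csvField(story[c])).join(",");
}

// Emit the header only for the first batch, because COPY ... CSV HEADER skips one line.
function toCsv(stories, { header = false } = {}) {
  const rows = stories.map(toCsvRow);
  const lines = header ? [COLUMNS.join(","), ...rows] : rows;
  return lines.length ? lines.join("\n") + "\n" : "";
}
```

Appending with fs.appendFileSync(outputPath, toCsv(batch, { header: isFirstBatch })) would match the batch-by-batch approach of the script. Here, outputPath and isFirstBatch are placeholders.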

Wrap-up

Ollama allows you to use LLMs locally or on-premises, with a wide array of models for different use cases.

For more information about Ollama, see the Ollama documentation.

To learn more about integrating LLMs with YugabyteDB, see the LangChain and OpenAI tutorial.