Quick start

The local cluster setup on a single host is intended for development and learning. For production deployment, performance benchmarking, or deploying a true multi-node, multi-host setup, see Deploy YugabyteDB.

Install YugabyteDB

Installing YugabyteDB involves completing prerequisites and downloading the packaged database.

Prerequisites

Before installing YugabyteDB, ensure that you have the following available:

- macOS 10.12 or later. If you are on Apple silicon, you need to download the macOS ARM package.

- Python 3. To check the version, execute the following command:

  python --version

  The output should look similar to the following:

  Python 3.7.3

- wget or curl.

  Note that the following instructions use the wget command to download files. If you prefer to use curl (included in macOS), you can replace wget with curl -O.

  To install wget on your Mac, you can run the following command if you use Homebrew:

  brew install wget

Set file limits

Because each tablet maps to its own file, experimenting with several hundred tables, each with several tablets, can create a very large number of files in the current shell. Execute the following command to ensure that the limit is set to a large number:

launchctl limit

It is recommended to have at least the following soft and hard limits:

maxproc 2500 2500

maxfiles 1048576 1048576
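As a quick check, the following Python sketch parses the text printed by launchctl limit and reports which of the two limits above fall short. The helper names are hypothetical and are not part of YugabyteDB or macOS. The sketch assumes that each relevant line contains a name, a soft limit, and a hard limit, and that "unlimited" means no limit.

```python
# Recommended minimums from this guide (they apply to both the soft and hard limits).
RECOMMENDED = {"maxproc": 2500, "maxfiles": 1048576}


def _to_number(value: str) -> float:
    """Convert a launchctl limit value; 'unlimited' becomes infinity."""
    return float("inf") if value == "unlimited" else int(value)


def parse_launchctl_limits(output: str) -> dict:
    """Parse lines such as 'maxfiles  256  unlimited' into {name: (soft, hard)}."""
    limits = {}
    for line in output.splitlines():
        parts = line.split()
        if len(parts) != 3:
            continue  # skip blank or unexpected lines
        name, soft, hard = parts
        try:
            limits[name] = (_to_number(soft), _to_number(hard))
        except ValueError:
            continue  # ignore values that are neither integers nor 'unlimited'
    return limits


def limits_below_recommendation(output: str) -> list:
    """Return the names of limits whose soft or hard value is below RECOMMENDED."""
    limits = parse_launchctl_limits(output)
    too_low = []
    for name, minimum in RECOMMENDED.items():
        soft, hard = limits.get(name, (0, 0))  # a missing entry counts as too low
        if soft < minimum or hard < minimum:
            too_low.append(name)
    return too_low
```

To check your own machine, pass the function the captured output, for example subprocess.run(["launchctl", "limit"], capture_output=True, text=True).stdout.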

Edit /etc/sysctl.conf, if it exists, to include the following:

kern.maxfiles=1048576

kern.maxproc=2500

kern.maxprocperuid=2500

kern.maxfilesperproc=1048576

If this file does not exist, create the following two files (this will require sudo access):

- /Library/LaunchDaemons/limit.maxfiles.plist, and insert the following:

  <?xml version="1.0" encoding="UTF-8"?>
  <!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
  <plist version="1.0">
    <dict>
      <key>Label</key>
      <string>limit.maxfiles</string>
      <key>ProgramArguments</key>
      <array>
        <string>launchctl</string>
        <string>limit</string>
        <string>maxfiles</string>
        <string>1048576</string>
        <string>1048576</string>
      </array>
      <key>RunAtLoad</key>
      <true/>
      <key>ServiceIPC</key>
      <false/>
    </dict>
  </plist>

- /Library/LaunchDaemons/limit.maxproc.plist, and insert the following:

  <?xml version="1.0" encoding="UTF-8"?>
  <!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
  <plist version="1.0">
    <dict>
      <key>Label</key>
      <string>limit.maxproc</string>
      <key>ProgramArguments</key>
      <array>
        <string>launchctl</string>
        <string>limit</string>
        <string>maxproc</string>
        <string>2500</string>
        <string>2500</string>
      </array>
      <key>RunAtLoad</key>
      <true/>
      <key>ServiceIPC</key>
      <false/>
    </dict>
  </plist>
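If you prefer to generate these two files instead of typing them, Python's standard plistlib module can produce equivalent XML. This is a sketch: limit_plist is a hypothetical helper, and the whitespace in plistlib's output may differ from the listings above even though it encodes the same keys and values.

```python
import plistlib


def limit_plist(resource: str, soft: int, hard: int) -> bytes:
    """Build a launchd job that runs `launchctl limit <resource> <soft> <hard>` at load."""
    job = {
        "Label": f"limit.{resource}",
        "ProgramArguments": ["launchctl", "limit", resource, str(soft), str(hard)],
        "RunAtLoad": True,   # run once when launchd loads the job (for example, at boot)
        "ServiceIPC": False,
    }
    # FMT_XML produces the same <?xml ...?> and <!DOCTYPE plist ...> header as the listings.
    return plistlib.dumps(job, fmt=plistlib.FMT_XML)
```

For example, write the result of limit_plist("maxproc", 2500, 2500) to limit.maxproc.plist in a scratch directory, and then copy the file into /Library/LaunchDaemons with sudo.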

Ensure that the plist files are owned by root:wheel and have permissions -rw-r--r--. For the new limits to take effect, reboot your computer or run the following commands:

sudo launchctl load -w /Library/LaunchDaemons/limit.maxfiles.plist

sudo launchctl load -w /Library/LaunchDaemons/limit.maxproc.plist

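If a service is already loaded, you might need to unload it before loading it again, for example with sudo launchctl unload -w /Library/LaunchDaemons/limit.maxfiles.plist.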
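If launchctl does not pick up the files, a common cause is that they do not meet the ownership or mode requirement (root:wheel, -rw-r--r--). The sketch below checks both. plist_permission_problems is a hypothetical helper, and the sketch assumes that root is uid 0 and wheel is gid 0, as on macOS. To fix a reported problem, run sudo chown root:wheel <file> or sudo chmod 644 <file>.

```python
import os
import stat


def plist_permission_problems(path: str) -> list:
    """Return human-readable reasons why the plist at `path` may be rejected."""
    st = os.stat(path)
    problems = []
    if (st.st_uid, st.st_gid) != (0, 0):  # root is uid 0 and wheel is gid 0 on macOS
        problems.append("owner is not root:wheel")
    mode = stat.S_IMODE(st.st_mode)
    if mode != 0o644:  # 0o644 is -rw-r--r--
        problems.append(f"mode is {oct(mode)}, expected 0o644")
    return problems
```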

Download

Download YugabyteDB as follows, choosing the package that matches your Mac's processor.

For Intel-based Macs (x86_64):

- Download the YugabyteDB tar.gz file by executing the following wget command:

  wget https://software.yugabyte.com/releases/2.25.2.0/yugabyte-2.25.2.0-b359-darwin-x86_64.tar.gz

- Extract the package and then change directories to the YugabyteDB home, as follows:

  tar xvfz yugabyte-2.25.2.0-b359-darwin-x86_64.tar.gz && cd yugabyte-2.25.2.0/

For Macs with Apple silicon (arm64):

- Download the YugabyteDB tar.gz file by executing the following wget command:

  wget https://software.yugabyte.com/releases/2.25.2.0/yugabyte-2.25.2.0-b359-darwin-arm64.tar.gz

- Extract the package and then change directories to the YugabyteDB home, as follows:

  tar xvfz yugabyte-2.25.2.0-b359-darwin-arm64.tar.gz && cd yugabyte-2.25.2.0/
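To pick the right package in a script, the following sketch maps platform.machine() to the package names used above. The version and build strings are copied from these commands, and package_url is a hypothetical helper. Note that a Python interpreter running under Rosetta reports x86_64 even on Apple silicon.

```python
import platform
from typing import Optional

VERSION = "2.25.2.0"  # release and build taken from the download commands above
BUILD = "b359"
BASE_URL = "https://software.yugabyte.com/releases"

# platform.machine() values mapped to the architecture suffix in the package name.
ARCH_SUFFIX = {"x86_64": "x86_64", "arm64": "arm64", "aarch64": "arm64"}


def package_url(machine: Optional[str] = None) -> str:
    """Return the macOS tar.gz URL for `machine` (defaults to the current host)."""
    machine = machine or platform.machine()
    arch = ARCH_SUFFIX.get(machine)
    if arch is None:
        raise ValueError(f"no macOS package listed for architecture {machine!r}")
    return f"{BASE_URL}/{VERSION}/yugabyte-{VERSION}-{BUILD}-darwin-{arch}.tar.gz"
```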

Create a local cluster

Use the yugabyted utility to create and manage universes.

On macOS versions earlier than Monterey, create a single-node local cluster with a replication factor (RF) of 1 by running the following command:

./bin/yugabyted start

macOS Monterey enables AirPlay receiving by default, and AirPlay Receiver listens on port 7000, which is also the default port of the YB-Master web server. This conflict causes yugabyted start to fail. To avoid it, use the --master_webserver_port flag to change the default port number when you start the cluster, as follows:

./bin/yugabyted start --master_webserver_port=9999

Alternatively, you can disable AirPlay receiving, then start YugabyteDB normally, and then, optionally, re-enable AirPlay receiving.
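Before starting the cluster, you can check whether another process, such as AirPlay Receiver, already holds port 7000. The following sketch tries to bind the port and suggests the matching yugabyted start command. The helper names and the 9999 fallback (taken from the example above) are assumptions. A successful bind on one address does not rule out a listener on another address or on IPv6, so treat the result as a hint.

```python
import socket


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if a TCP socket can be bound to host:port right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))
        except OSError:  # typically EADDRINUSE when another process holds the port
            return False
    return True


def start_command(master_port: int = 7000, fallback_port: int = 9999) -> str:
    """Suggest a yugabyted start command that avoids a busy master web server port."""
    if port_is_free(master_port):
        return "./bin/yugabyted start"
    return f"./bin/yugabyted start --master_webserver_port={fallback_port}"
```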

Expect an output similar to the following:

+----------------------------------------------------------------------------------------------------------+
| yugabyted |
+----------------------------------------------------------------------------------------------------------+
| Status : Running. |
| Replication Factor : 1 |
| YugabyteDB UI : http://127.0.0.1:15433 |
| JDBC : jdbc:postgresql://127.0.0.1:5433/yugabyte?user=yugabyte&password=yugabyte |
| YSQL : bin/ysqlsh -U yugabyte -d yugabyte |
| YCQL : bin/ycqlsh -u cassandra |
| Data Dir : /Users/myuser/var/data |
| Log Dir : /Users/myuser/var/logs |
| Universe UUID : 41e54e9f-6f2f-4a46-befe-e0cd65d3056a |
+----------------------------------------------------------------------------------------------------------+

After the cluster has been created, clients can connect to the YSQL and YCQL APIs at localhost:5433 and localhost:9042, respectively. You can also check ~/var/data to see the data directory and ~/var/logs to see the logs directory.
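The JDBC line in the status output follows the PostgreSQL JDBC URL format. As a sketch, the following hypothetical jdbc_url helper assembles that URL from the same parameters the sample applications use. Query values are percent-encoded so that characters such as & in a password do not break the query string. The sslmode and sslrootcert parameters, which PostgreSQL JDBC drivers accept, are added only when set.

```python
from typing import Optional
from urllib.parse import urlencode


def jdbc_url(host: str = "127.0.0.1", port: int = 5433, database: str = "yugabyte",
             user: str = "yugabyte", password: str = "yugabyte",
             sslmode: Optional[str] = None, sslrootcert: Optional[str] = None) -> str:
    """Build a PostgreSQL-style JDBC URL; query values are percent-encoded."""
    params = {"user": user, "password": password}
    if sslmode:
        params["sslmode"] = sslmode  # for example, verify-full for YugabyteDB Aeon
    if sslrootcert:
        params["sslrootcert"] = sslrootcert  # path to the cluster CA certificate
    return f"jdbc:postgresql://{host}:{port}/{database}?{urlencode(params)}"
```

With no arguments, the function reproduces the JDBC URL shown in the yugabyted output.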

Execute the following command to check the cluster status at any time:

./bin/yugabyted status
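In scripts, you may also want to wait until the YSQL and YCQL ports accept TCP connections before starting a client. The following minimal sketch assumes the default ports listed above, and wait_for_port is a hypothetical helper. An open port only shows that the server is listening, not that the cluster is fully healthy, so yugabyted status remains the primary check.

```python
import socket
import time

ENDPOINTS = {"YSQL": 5433, "YCQL": 9042}  # default ports from the output above


def wait_for_port(host: str, port: int, timeout: float = 30.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:  # refused, unreachable, or timed out
            if time.monotonic() >= deadline:
                return False
            time.sleep(0.5)
```

For example: for name, port in ENDPOINTS.items(): print(name, wait_for_port("127.0.0.1", port)).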

Connect to the database

Using the YugabyteDB SQL shell, ysqlsh, you can connect to your cluster and interact with it using distributed SQL. ysqlsh is installed with YugabyteDB and is located in the bin directory of the YugabyteDB home directory.

(If you have previously installed YugabyteDB version 2.8 or later and created a cluster on the same computer, you may need to upgrade the YSQL system catalog to run the latest features.)

To open the YSQL shell, run ysqlsh:

./bin/ysqlsh

ysqlsh (15.2-YB-2.25.2.0-b0)
Type "help" for help.

yugabyte=#

To load sample data and explore an example using ysqlsh, follow the instructions in Install the Retail Analytics sample database.

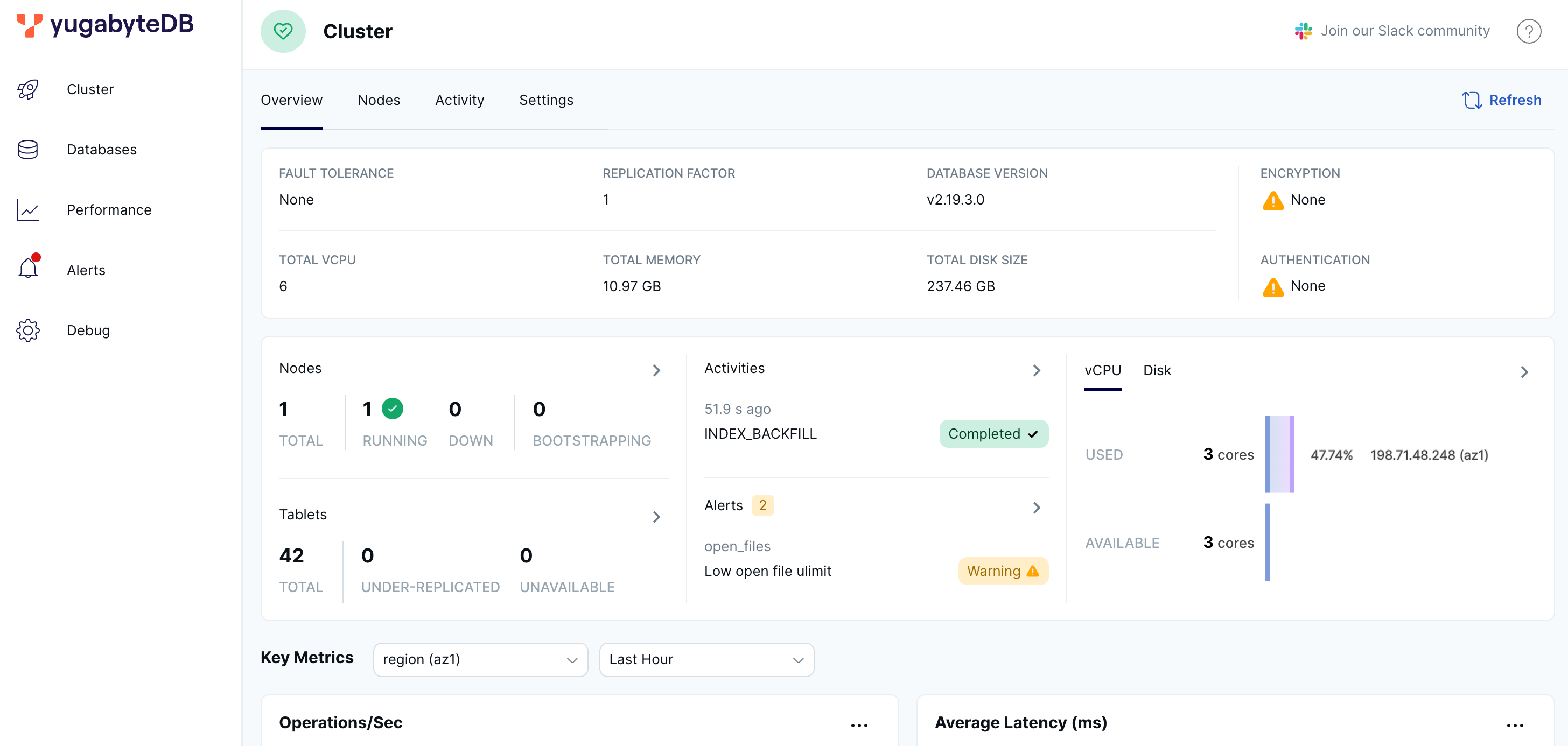

Monitor your cluster

When you start a cluster using yugabyted, you can monitor the cluster using the YugabyteDB UI, available at http://127.0.0.1:15433.

The YugabyteDB UI provides cluster status, node information, performance metrics, and more.

Build an application

Applications connect to and interact with YugabyteDB using API client libraries (also known as client drivers). This section shows how to connect applications to your cluster using your favorite programming language.

Choose your language

Choose the language you want to use to build your application.

The Java application connects to a YugabyteDB cluster using the topology-aware Yugabyte JDBC driver and performs basic SQL operations. Use the application as a template to get started with YugabyteDB in Java.

The application requires the following:

- Java Development Kit (JDK) 1.8 or later. JDK installers for Linux and macOS can be downloaded from Oracle, Adoptium (OpenJDK), or Azul Systems (OpenJDK). Homebrew users on macOS can install using brew install openjdk.

- Apache Maven 3.3 or later.

To build and run the application, do the following:

- Clone the sample application to your computer:

  git clone https://github.com/YugabyteDB-Samples/yugabyte-simple-java-app.git && cd yugabyte-simple-java-app

- Provide connection parameters.

  (You can skip this step and use the defaults if your cluster is running locally and listening on 127.0.0.1:5433.)

  The application needs to establish a connection to the YugabyteDB cluster. To do this:

  - Open the app.properties file located in the application src/main/resources/ folder.

  - Set the following configuration parameters:

    - host - the host name of your YugabyteDB cluster. For local clusters, use the default (127.0.0.1). For YugabyteDB Aeon, select your cluster on the Clusters page, and click Settings. The host is displayed under Connection Parameters.
    - port - the port number for the driver to use (the default YugabyteDB YSQL port is 5433).
    - database - the name of the database you are connecting to (the default is yugabyte).
    - dbUser and dbPassword - the username and password for the YugabyteDB database. For local clusters, use the defaults (yugabyte). For YugabyteDB Aeon, use the credentials in the credentials file you downloaded.

  - YugabyteDB Aeon requires SSL connections, so you need to set the following additional parameters:

    - sslMode - the SSL mode to use; use verify-full.
    - sslRootCert - the full path to the YugabyteDB Aeon cluster CA certificate.

  - Save the file.

- Build the application.

  $ mvn clean package

- Start the application.

  $ java -cp target/yugabyte-simple-java-app-1.0-SNAPSHOT.jar SampleApp

  If you are running the application on a free or single-node cluster, the driver displays a warning that load balancing failed and falls back to a regular connection.

You should see output similar to the following:

>>>> Successfully connected to YugabyteDB!
>>>> Successfully created DemoAccount table.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 10000
name = John, age = 28, country = Canada, balance = 9000
>>>> Transferred 800 between accounts.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 9200
name = John, age = 28, country = Canada, balance = 9800

You have successfully executed a basic Java application that works with YugabyteDB.
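Each sample ends by moving 800 from Jessica's account to John's, so the balances change from 10000 and 9000 to 9200 and 9800 while the total stays at 19000. The following sketch illustrates that transaction pattern in Python. It uses the standard sqlite3 module as an in-memory stand-in for YugabyteDB so that it runs without a cluster. The table layout and SQL are assumptions modeled on the output above, not the sample application's actual code.

```python
import sqlite3


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> None:
    """Move `amount` from src to dst so that both updates commit or neither does."""
    with conn:  # commits on normal exit, rolls back if an exception escapes
        debit = conn.execute(
            "UPDATE DemoAccount SET balance = balance - ? WHERE name = ? AND balance >= ?",
            (amount, src, amount))
        if debit.rowcount != 1:
            raise ValueError(f"{src!r} is missing or has insufficient funds")
        credit = conn.execute(
            "UPDATE DemoAccount SET balance = balance + ? WHERE name = ?", (amount, dst))
        if credit.rowcount != 1:
            raise ValueError(f"{dst!r} is missing")  # the debit above is rolled back


def balances(conn: sqlite3.Connection) -> dict:
    return dict(conn.execute("SELECT name, balance FROM DemoAccount ORDER BY name"))


# In-memory stand-in for the DemoAccount table that the sample apps create.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE DemoAccount (id INTEGER PRIMARY KEY, name TEXT, "
             "age INTEGER, country TEXT, balance INTEGER)")
with conn:
    conn.executemany(
        "INSERT INTO DemoAccount (name, age, country, balance) VALUES (?, ?, ?, ?)",
        [("Jessica", 28, "USA", 10000), ("John", 28, "Canada", 9000)])

transfer(conn, "Jessica", "John", 800)
print(balances(conn))  # {'Jessica': 9200, 'John': 9800}
```

The conditional debit (balance >= ?) and the row-count checks make a failed transfer raise an error and roll back, rather than leave the two accounts out of sync.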

The Go application connects to a YugabyteDB cluster using the Go PostgreSQL driver and performs basic SQL operations. Use the application as a template to get started with YugabyteDB in Go.

The application requires the following:

- Go (tested with version 1.17.6).

To build and run the application, do the following:

- Clone the sample application to your computer:

  git clone https://github.com/YugabyteDB-Samples/yugabyte-simple-go-app.git && cd yugabyte-simple-go-app

- Provide connection parameters.

  (You can skip this step and use the defaults if your cluster is running locally and listening on 127.0.0.1:5433.)

  The application needs to establish a connection to the YugabyteDB cluster. To do this:

  - Open the sample-app.go file.

  - Set the following configuration parameter constants:

    - host - the host name of your YugabyteDB cluster. For local clusters, use the default (127.0.0.1). For YugabyteDB Aeon, select your cluster on the Clusters page, and click Settings. The host is displayed under Connection Parameters.
    - port - the port number for the driver to use (the default YugabyteDB YSQL port is 5433).
    - dbName - the name of the database you are connecting to (the default is yugabyte).
    - dbUser and dbPassword - the username and password for the YugabyteDB database. For local clusters, use the defaults (yugabyte). For YugabyteDB Aeon, use the credentials in the credentials file you downloaded.

  - YugabyteDB Aeon requires SSL connections, so you need to set the following additional parameters:

    - sslMode - the SSL mode to use; use verify-full.
    - sslRootCert - the full path to the YugabyteDB Aeon cluster CA certificate.

  - Save the file.

- Initialize the GO111MODULE variable.

  $ export GO111MODULE=auto

- Install the Go PostgreSQL driver.

  $ go get github.com/lib/pq

- Start the application.

  $ go run sample-app.go

You should see output similar to the following:

>>>> Successfully connected to YugabyteDB!
>>>> Successfully created table DemoAccount.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 10000
name = John, age = 28, country = Canada, balance = 9000
>>>> Transferred 800 between accounts.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 9200
name = John, age = 28, country = Canada, balance = 9800

You have successfully executed a basic Go application that works with YugabyteDB.
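lib/pq also accepts connection settings as a postgres:// URI. If you prefer to keep the settings in a single string, the following sketch shows how the constants above map onto such a URI. It is written in Python for brevity, and postgres_uri is a hypothetical helper. The user name, password, and database name are percent-encoded so that characters such as @ or : do not break the URI.

```python
from typing import Optional
from urllib.parse import quote, urlencode


def postgres_uri(host: str = "127.0.0.1", port: int = 5433, db_name: str = "yugabyte",
                 user: str = "yugabyte", password: str = "yugabyte",
                 sslmode: Optional[str] = None, sslrootcert: Optional[str] = None) -> str:
    """Build a postgresql:// URI; SSL parameters are appended only when set."""
    userinfo = f"{quote(user, safe='')}:{quote(password, safe='')}"
    uri = f"postgresql://{userinfo}@{host}:{port}/{quote(db_name, safe='')}"
    query = {k: v for k, v in (("sslmode", sslmode), ("sslrootcert", sslrootcert)) if v}
    return f"{uri}?{urlencode(query)}" if query else uri
```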

The Python application connects to a YugabyteDB cluster using the Python psycopg2 PostgreSQL database adapter and performs basic SQL operations. Use the application as a template to get started with YugabyteDB in Python.

The application requires the following:

- Python 3.6 or later (Python 3.9.7 or later if running macOS on Apple silicon).

To build and run the application, do the following:

- Clone the sample application to your computer:

  git clone https://github.com/YugabyteDB-Samples/yugabyte-simple-python-app.git && cd yugabyte-simple-python-app

- Provide connection parameters.

  (You can skip this step and use the defaults if your cluster is running locally and listening on 127.0.0.1:5433.)

  The application needs to establish a connection to the YugabyteDB cluster. To do this:

  - Open the sample-app.py file.

  - Set the following configuration parameter constants:

    - host - the host name of your YugabyteDB cluster. For local clusters, use the default (127.0.0.1). For YugabyteDB Aeon, select your cluster on the Clusters page, and click Settings. The host is displayed under Connection Parameters.
    - port - the port number for the driver to use (the default YugabyteDB YSQL port is 5433).
    - dbName - the name of the database you are connecting to (the default is yugabyte).
    - dbUser and dbPassword - the username and password for the YugabyteDB database. For local clusters, use the defaults (yugabyte). For YugabyteDB Aeon, use the credentials in the credentials file you downloaded.

  - YugabyteDB Aeon requires SSL connections, so you need to set the following additional parameters:

    - sslMode - the SSL mode to use; use verify-full.
    - sslRootCert - the full path to the YugabyteDB Aeon cluster CA certificate.

  - Save the file.

- Install the psycopg2 PostgreSQL database adapter.

  $ pip3 install psycopg2-binary

- Start the application.

  $ python3 sample-app.py

You should see output similar to the following:

>>>> Successfully connected to YugabyteDB!
>>>> Successfully created table DemoAccount.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 10000
name = John, age = 28, country = Canada, balance = 9000
>>>> Transferred 800 between accounts.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 9200
name = John, age = 28, country = Canada, balance = 9800

You have successfully executed a basic Python application that works with YugabyteDB.
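For reference, psycopg2.connect accepts the same settings as keyword arguments, which it passes through to libpq. The following sketch builds that set of arguments and adds sslmode and sslrootcert only for SSL connections such as YugabyteDB Aeon. connection_kwargs and connect are hypothetical helpers, not the sample's code. psycopg2 is imported only inside connect, so you can try the helper without the driver installed.

```python
from typing import Optional


def connection_kwargs(host: str = "127.0.0.1", port: int = 5433, db_name: str = "yugabyte",
                      db_user: str = "yugabyte", db_password: str = "yugabyte",
                      ssl_mode: Optional[str] = None,
                      ssl_root_cert: Optional[str] = None) -> dict:
    """Map the sample's settings onto libpq keyword names understood by psycopg2."""
    kwargs = {"host": host, "port": port, "dbname": db_name,
              "user": db_user, "password": db_password}
    if ssl_mode:
        kwargs["sslmode"] = ssl_mode            # for example, verify-full for Aeon
    if ssl_root_cert:
        kwargs["sslrootcert"] = ssl_root_cert   # full path to the cluster CA certificate
    return kwargs


def connect(**settings):
    """Open a connection; requires psycopg2 (or psycopg2-binary) and a running cluster."""
    import psycopg2  # imported lazily so connection_kwargs works without the driver
    return psycopg2.connect(**connection_kwargs(**settings))
```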

The Node.js application connects to a YugabyteDB cluster using the node-postgres driver and performs basic SQL operations. Use the application as a template to get started with YugabyteDB in Node.js.

The application requires the following:

- The latest version of Node.js.

To build and run the application, do the following:

- Clone the sample application to your computer:

  git clone https://github.com/YugabyteDB-Samples/yugabyte-simple-node-app.git && cd yugabyte-simple-node-app

- Provide connection parameters.

  (You can skip this step and use the defaults if your cluster is running locally and listening on 127.0.0.1:5433.)

  The application needs to establish a connection to the YugabyteDB cluster. To do this:

  - Open the sample-app.js file.

  - Set the following configuration parameter constants:

    - host - the host name of your YugabyteDB cluster. For local clusters, use the default (127.0.0.1). For YugabyteDB Aeon, select your cluster on the Clusters page, and click Settings. The host is displayed under Connection Parameters.
    - port - the port number for the driver to use (the default YugabyteDB YSQL port is 5433).
    - database - the name of the database you are connecting to (the default is yugabyte).
    - user and password - the username and password for the YugabyteDB database. For local clusters, use the defaults (yugabyte). For YugabyteDB Aeon, use the credentials in the credentials file you downloaded.

  - YugabyteDB Aeon requires SSL connections, so you need to set the following additional parameter:

    - ssl - to enable verify-ca SSL mode, the rejectUnauthorized property is set to true to require root certificate chain validation; replace path_to_your_root_certificate with the full path to the YugabyteDB Aeon cluster CA certificate.

  - Save the file.

- Install the node-postgres module.

  npm install pg

- Install the async utility:

  npm install --save async

- Start the application.

  $ node sample-app.js

You should see output similar to the following:

>>>> Successfully connected to YugabyteDB!
>>>> Successfully created table DemoAccount.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 10000
name = John, age = 28, country = Canada, balance = 9000
>>>> Transferred 800 between accounts.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 9200
name = John, age = 28, country = Canada, balance = 9800

You have successfully executed a basic Node.js application that works with YugabyteDB.
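Every sample expects the CA certificate path to point to a PEM file. The following Python sketch is a quick sanity check that counts the PEM certificate blocks in a file. count_pem_certificates is a hypothetical helper. It checks only the file's shape, not whether the certificate is valid or matches your cluster.

```python
import re

# One PEM certificate block: BEGIN line, base64 body, END line.
PEM_CERT = re.compile(
    r"-----BEGIN CERTIFICATE-----\s+[A-Za-z0-9+/=\s]+?-----END CERTIFICATE-----")


def count_pem_certificates(path: str) -> int:
    """Return how many PEM certificate blocks the file at `path` contains."""
    with open(path, encoding="ascii", errors="replace") as f:
        return len(PEM_CERT.findall(f.read()))
```

A result of 0 usually means that the path points to the wrong file, such as a DER-encoded certificate or the credentials file.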

The C application connects to a YugabyteDB cluster using the libpq driver and performs basic SQL operations. Use the application as a template to get started with YugabyteDB in C.

The application requires the following:

- 32-bit (x86) or 64-bit (x64) architecture machine. (Use Rosetta to build and run on Apple silicon.)

- gcc 4.1.2 or later, or clang 3.4 or later installed.

- OpenSSL 1.1.1 or later (used by libpq to establish secure SSL connections).

- libpq. Homebrew users on macOS can install using brew install libpq. You can download the PostgreSQL binaries and source from PostgreSQL Downloads.

To build and run the application, do the following:

- Clone the sample application to your computer:

  git clone https://github.com/YugabyteDB-Samples/yugabyte-simple-c-app.git && cd yugabyte-simple-c-app

- Provide connection parameters.

  (You can skip this step and use the defaults if your cluster is running locally and listening on 127.0.0.1:5433.)

  The application needs to establish a connection to the YugabyteDB cluster. To do this:

  - Open the sample-app.c file.

  - Set the following configuration-related macros:

    - HOST - the host name of your YugabyteDB cluster. For local clusters, use the default (127.0.0.1). For YugabyteDB Aeon, select your cluster on the Clusters page, and click Settings. The host is displayed under Connection Parameters.
    - PORT - the port number for the driver to use (the default YugabyteDB YSQL port is 5433).
    - DB_NAME - the name of the database you are connecting to (the default is yugabyte).
    - USER and PASSWORD - the username and password for the YugabyteDB database. For local clusters, use the defaults (yugabyte). For YugabyteDB Aeon, use the credentials in the credentials file you downloaded.

  - YugabyteDB Aeon requires SSL connections, so you need to set the following additional parameters:

    - SSL_MODE - the SSL mode to use; use verify-full.
    - SSL_ROOT_CERT - the full path to the YugabyteDB Aeon cluster CA certificate.

  - Save the file.

- Build the application with gcc or clang.

  gcc sample-app.c -o sample-app -I<path-to-libpq>/libpq/include -L<path-to-libpq>/libpq/lib -lpq

  Replace <path-to-libpq> with the path to the libpq installation; for example, /usr/local/opt.

- Start the application.

  $ ./sample-app

You should see output similar to the following:

>>>> Successfully connected to YugabyteDB!
>>>> Successfully created table DemoAccount.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 10000
name = John, age = 28, country = Canada, balance = 9000
>>>> Transferred 800 between accounts.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 9200
name = John, age = 28, country = Canada, balance = 9800

You have successfully executed a basic C application that works with YugabyteDB.
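libpq accepts its settings as a keyword/value string such as host=127.0.0.1 port=5433 dbname=yugabyte. The following Python sketch shows how to format such a string. conninfo is a hypothetical helper. It quotes values that contain whitespace, single quotes, or backslashes, and it escapes those characters as libpq requires. libpqxx, Ruby pg, and PHP pg_connect accept the same format. This sketch does not show how sample-app.c builds its own string.

```python
def conninfo(**params) -> str:
    """Format keyword arguments as a libpq connection string; None values are skipped."""
    parts = []
    for key, value in params.items():
        if value is None:
            continue  # leave unset parameters out entirely
        text = str(value)
        # libpq needs single quotes around empty values and values containing
        # whitespace; inside a value, ' and \ are escaped with a backslash.
        if text == "" or any(ch.isspace() or ch in "'\\" for ch in text):
            text = "'" + text.replace("\\", "\\\\").replace("'", "\\'") + "'"
        parts.append(f"{key}={text}")
    return " ".join(parts)
```

For example, conninfo(host="127.0.0.1", port=5433, dbname="yugabyte", sslmode="verify-full", sslrootcert=None) leaves out sslrootcert because its value is None.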

The C++ application connects to a YugabyteDB cluster using the libpqxx driver and performs basic SQL operations. Use the application as a template to get started with YugabyteDB in C++.

The application requires the following:

- 32-bit (x86) or 64-bit (x64) architecture machine. (Use Rosetta to build and run on Apple silicon.)

- gcc 4.1.2 or later, or clang 3.4 or later installed.

- OpenSSL 1.1.1 or later (used by libpq and libpqxx to establish secure SSL connections).

- libpq. Homebrew users on macOS can install using brew install libpq. You can download the PostgreSQL binaries and source from PostgreSQL Downloads.

- libpqxx. Homebrew users on macOS can install using brew install libpqxx. To build the driver yourself, refer to Building libpqxx.

To build and run the application, do the following:

- Clone the sample application to your computer:

  git clone https://github.com/YugabyteDB-Samples/yugabyte-simple-cpp-app.git && cd yugabyte-simple-cpp-app

- Provide connection parameters.

  (You can skip this step and use the defaults if your cluster is running locally and listening on 127.0.0.1:5433.)

  The application needs to establish a connection to the YugabyteDB cluster. To do this:

  - Open the sample-app.cpp file.

  - Set the following configuration-related constants:

    - HOST - the host name of your YugabyteDB cluster. For local clusters, use the default (127.0.0.1). For YugabyteDB Aeon, select your cluster on the Clusters page, and click Settings. The host is displayed under Connection Parameters.
    - PORT - the port number for the driver to use (the default YugabyteDB YSQL port is 5433).
    - DB_NAME - the name of the database you are connecting to (the default is yugabyte).
    - USER and PASSWORD - the username and password for the YugabyteDB database. For local clusters, use the defaults (yugabyte). For YugabyteDB Aeon, use the credentials in the credentials file you downloaded.

  - YugabyteDB Aeon requires SSL connections, so you need to set the following additional parameters:

    - SSL_MODE - the SSL mode to use; use verify-full.
    - SSL_ROOT_CERT - the full path to the YugabyteDB Aeon cluster CA certificate.

  - Save the file.

- Build the application with gcc or clang.

  g++ -std=c++17 sample-app.cpp -o sample-app -lpqxx -lpq \
    -I<path-to-libpq>/libpq/include -I<path-to-libpqxx>/libpqxx/include \
    -L<path-to-libpq>/libpq/lib -L<path-to-libpqxx>/libpqxx/lib

  Replace <path-to-libpq> with the path to the libpq installation, and <path-to-libpqxx> with the path to the libpqxx installation; for example, /usr/local/opt.

- Start the application.

  $ ./sample-app

You should see output similar to the following:

>>>> Successfully connected to YugabyteDB!
>>>> Successfully created table DemoAccount.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 10000
name = John, age = 28, country = Canada, balance = 9000
>>>> Transferred 800 between accounts.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 9200
name = John, age = 28, country = Canada, balance = 9800

You have successfully executed a basic C++ application that works with YugabyteDB.

The C# application connects to a YugabyteDB cluster using the Npgsql driver and performs basic SQL operations. Use the application as a template to get started with YugabyteDB in C#.

The application requires the following:

- .NET 6.0 SDK or later.

To build and run the application, do the following:

- Clone the sample application to your computer:

  git clone https://github.com/YugabyteDB-Samples/yugabyte-simple-csharp-app.git && cd yugabyte-simple-csharp-app

  The yugabyte-simple-csharp-app.csproj file includes the following package reference to include the driver:

  <PackageReference Include="npgsql" Version="6.0.3" />

- Provide connection parameters.

  (You can skip this step and use the defaults if your cluster is running locally and listening on 127.0.0.1:5433.)

  The application needs to establish a connection to the YugabyteDB cluster. To do this:

  - Open the sample-app.cs file.

  - Set the following configuration-related parameters:

    - urlBuilder.Host - the host name of your YugabyteDB cluster. For local clusters, use the default (127.0.0.1). For YugabyteDB Aeon, select your cluster on the Clusters page, and click Settings. The host is displayed under Connection Parameters.
    - urlBuilder.Port - the port number for the driver to use (the default YugabyteDB YSQL port is 5433).
    - urlBuilder.Database - the name of the database you are connecting to (the default is yugabyte).
    - urlBuilder.Username and urlBuilder.Password - the username and password for the YugabyteDB database. For local clusters, use the defaults (yugabyte). For YugabyteDB Aeon, use the credentials in the credentials file you downloaded.

  - YugabyteDB Aeon requires SSL connections, so you need to set the following additional parameters:

    - urlBuilder.SslMode - the SSL mode to use; use SslMode.VerifyFull.
    - urlBuilder.RootCertificate - the full path to the YugabyteDB Aeon cluster CA certificate.

  - Save the file.

- Build and run the application.

  dotnet run

You should see output similar to the following:

>>>> Successfully connected to YugabyteDB!
>>>> Successfully created table DemoAccount.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 10000
name = John, age = 28, country = Canada, balance = 9000
>>>> Transferred 800 between accounts.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 9200
name = John, age = 28, country = Canada, balance = 9800

You have successfully executed a basic C# application that works with YugabyteDB.

The Ruby application connects to a YugabyteDB cluster using the Ruby Pg driver and performs basic SQL operations. Use the application as a template to get started with YugabyteDB in Ruby.

The application requires the following:

- Ruby 3.1 or later.

- OpenSSL 1.1.1 or later (used by libpq and pg to establish secure SSL connections).

- libpq. Homebrew users on macOS can install using brew install libpq. You can download the PostgreSQL binaries and source from PostgreSQL Downloads.

- Ruby pg. To install Ruby pg, run the following command:

  gem install pg -- --with-pg-include=<path-to-libpq>/libpq/include --with-pg-lib=<path-to-libpq>/libpq/lib

  Replace <path-to-libpq> with the path to the libpq installation; for example, /usr/local/opt.

To build and run the application, do the following:

- Clone the sample application to your computer:

  git clone https://github.com/YugabyteDB-Samples/yugabyte-simple-ruby-app.git && cd yugabyte-simple-ruby-app

- Provide connection parameters.

  (You can skip this step and use the defaults if your cluster is running locally and listening on 127.0.0.1:5433.)

  The application needs to establish a connection to the YugabyteDB cluster. To do this:

  - Open the sample-app.rb file.

  - Set the following configuration-related parameters:

    - host - the host name of your YugabyteDB cluster. For local clusters, use the default (127.0.0.1). For YugabyteDB Aeon, select your cluster on the Clusters page, and click Settings. The host is displayed under Connection Parameters.
    - port - the port number for the driver to use (the default YugabyteDB YSQL port is 5433).
    - dbname - the name of the database you are connecting to (the default is yugabyte).
    - user and password - the username and password for the YugabyteDB database. For local clusters, use the defaults (yugabyte). For YugabyteDB Aeon, use the credentials in the credentials file you downloaded.

  - YugabyteDB Aeon requires SSL connections, so you need to set the following additional parameters:

    - sslmode - the SSL mode to use; use verify-full.
    - sslrootcert - the full path to the YugabyteDB Aeon cluster CA certificate.

  - Save the file.

- Make the application file executable.

  chmod +x sample-app.rb

- Run the application.

  $ ./sample-app.rb

You should see output similar to the following:

>>>> Successfully connected to YugabyteDB!
>>>> Successfully created table DemoAccount.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 10000
name = John, age = 28, country = Canada, balance = 9000
>>>> Transferred 800 between accounts.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 9200
name = John, age = 28, country = Canada, balance = 9800

You have successfully executed a basic Ruby application that works with YugabyteDB.

The Rust application connects to a YugabyteDB cluster using the Rust-Postgres driver and performs basic SQL operations. Use the application as a template to get started with YugabyteDB in Rust.

The application requires the following:

- Rust development environment. The sample application was created for Rust 1.58 but should work for earlier and later versions.

To build and run the application, do the following:

- Clone the sample application to your computer:

  git clone https://github.com/YugabyteDB-Samples/yugabyte-simple-rust-app.git && cd yugabyte-simple-rust-app

- Provide connection parameters.

  (You can skip this step and use the defaults if your cluster is running locally and listening on 127.0.0.1:5433.)

  The application needs to establish a connection to the YugabyteDB cluster. To do this:

  - Open the sample-app.rs file in the src directory.

  - Set the following configuration-related constants:

    - HOST - the host name of your YugabyteDB cluster. For local clusters, use the default (127.0.0.1). For YugabyteDB Aeon, select your cluster on the Clusters page, and click Settings. The host is displayed under Connection Parameters.
    - PORT - the port number for the driver to use (the default YugabyteDB YSQL port is 5433).
    - DB_NAME - the name of the database you are connecting to (the default is yugabyte).
    - USER and PASSWORD - the username and password for the YugabyteDB database. For local clusters, use the defaults (yugabyte). For YugabyteDB Aeon, use the credentials in the credentials file you downloaded.

  - YugabyteDB Aeon requires SSL connections, so you need to set the following additional parameters:

    - SSL_MODE - the SSL mode to use; use SslMode::Require.
    - SSL_ROOT_CERT - the full path to the YugabyteDB Aeon cluster CA certificate.

  - Save the file.

- Build and run the application.

  $ cargo run

The driver is included in the dependencies list of the Cargo.toml file and installed automatically the first time you run the application.

You should see output similar to the following:

>>>> Successfully connected to YugabyteDB!
>>>> Successfully created table DemoAccount.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 10000
name = John, age = 28, country = Canada, balance = 9000
>>>> Transferred 800 between accounts.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 9200
name = John, age = 28, country = Canada, balance = 9800

You have successfully executed a basic Rust application that works with YugabyteDB.

The PHP application connects to a YugabyteDB cluster using the php-pgsql driver and performs basic SQL operations. Use the application as a template to get started with YugabyteDB in PHP.

The application requires the following:

- PHP runtime. The sample application was created using PHP 8.1 but should work with earlier and later versions. Homebrew users on macOS can install PHP using brew install php.

- php-pgsql driver.

  - On macOS, Homebrew automatically installs the driver with brew install php.
  - Ubuntu users can install the driver using the sudo apt-get install php-pgsql command.
  - CentOS users can install the driver using the sudo yum install php-pgsql command.

To build and run the application, do the following:

- Clone the sample application to your computer:

  git clone https://github.com/YugabyteDB-Samples/yugabyte-simple-php-app.git && cd yugabyte-simple-php-app

- Provide connection parameters.

  (You can skip this step and use the defaults if your cluster is running locally and listening on 127.0.0.1:5433.)

  The application needs to establish a connection to the YugabyteDB cluster. To do this:

  - Open the sample-app.php file.

  - Set the following configuration-related constants:

    - HOST - the host name of your YugabyteDB cluster. For local clusters, use the default (127.0.0.1). For YugabyteDB Aeon, select your cluster on the Clusters page, and click Settings. The host is displayed under Connection Parameters.
    - PORT - the port number for the driver to use (the default YugabyteDB YSQL port is 5433).
    - DB_NAME - the name of the database to connect to (the default is yugabyte).
    - USER and PASSWORD - the username and password for the YugabyteDB database. For local clusters, use the defaults (yugabyte). For YugabyteDB Aeon, use the credentials in the credentials file you downloaded.

  - YugabyteDB Aeon requires SSL connections, so you need to set the following additional parameters:

    - SSL_MODE - the SSL mode to use; use verify-full.
    - SSL_ROOT_CERT - the full path to the YugabyteDB Aeon cluster CA certificate.

  - Save the file.

- Run the application.

  $ php sample-app.php

You should see output similar to the following:

>>>> Successfully connected to YugabyteDB!
>>>> Successfully created table DemoAccount.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 10000
name = John, age = 28, country = Canada, balance = 9000
>>>> Transferred 800 between accounts.
>>>> Selecting accounts:
name = Jessica, age = 28, country = USA, balance = 9200
name = John, age = 28, country = Canada, balance = 9800

You have successfully executed a basic PHP application that works with YugabyteDB.

Migrate from PostgreSQL

For PostgreSQL users seeking to transition to a modern, horizontally scalable database solution with built-in resilience, YugabyteDB offers a seamless lift-and-shift approach that ensures compatibility with PostgreSQL syntax and features while providing the scalability benefits of distributed SQL.

YugabyteDB enables midsize applications running on single-node instances to migrate to a fully distributed database environment. As applications grow, YugabyteDB transitions to distributed mode, allowing for massive scaling.

YugabyteDB Voyager simplifies the end-to-end database migration process, including cluster setup, schema migration, and data migration. It supports migrating data from PostgreSQL, MySQL, and Oracle databases to various YugabyteDB offerings, including Aeon, Anywhere, and the core open-source database.

You can install YugabyteDB Voyager on operating systems such as RHEL, Ubuntu, and macOS, or deploy it using Docker or an airgapped installation.

In addition to offline migration, the latest release of YugabyteDB Voyager introduces live, non-disruptive migration from PostgreSQL, along with new live migration workflows featuring fall-forward and fall-back capabilities.

Furthermore, Voyager previews a powerful migration assessment that scans existing applications and databases. This detailed assessment provides organizations with valuable insights into the readiness of their applications, data, and schema for migration, thereby accelerating modernization efforts.