Legacy provisioning

Legacy provisioning of on-premises nodes is deprecated. Provision your nodes using the node agent script.

In this mode, you manually install each prerequisite software component. Use this mode if you disallow SSH login to a root-privileged user at setup time and instead have a local sudo-privileged user with CLI access to the VM.

To meet the prerequisites, you are responsible for providing a VM with the following pre-installed:

- Supported Linux OS with an SSH-enabled, root-privileged user. YugabyteDB Anywhere (YBA) uses this user to automatically perform additional Linux configuration, such as creating the yugabyte user, updating the file descriptor settings via ulimits, and so on.

- Additional software

- Additional software for airgapped installations

| Save for later | To configure |
|---|---|
| VM IP addresses | On-premises provider |

Manually provision a VM

After you have created the VM, use the following instructions to provision the VM for use with an on-premises provider.

For each node VM, perform the following:

- Verify the Python version installed on the node

- Set up time synchronization

- Open incoming TCP ports

- Manually pre-provision the node

- Install Prometheus Node Exporter

- Enable yugabyte user processes to run after logout

- Install systemd-related database service unit files

- Install the node agent

After you have provisioned the nodes, you can proceed to Add instances to the on-prem provider.

Root-level systemd or cron

The following instructions use user-level systemd to provide the necessary access to system resources. Versions prior to v2.20 use root-level systemd or cron. If you have previously provisioned nodes for this provider using either root-level systemd or cron, you should use the same steps, as all nodes in a provider need to be provisioned in the same way. For instructions on provisioning using root-level systemd or cron, see the instructions for v2.18.

Verify the Python version installed on the node

Verify that Python 3.5-3.9 is installed on the node. Python 3.6 is recommended.

If more than one Python 3 version is installed, ensure that python3 refers to the right one. For example:

sudo alternatives --set python3 /usr/bin/python3.6

sudo alternatives --display python3

python3 -V
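If you check many nodes, you may prefer a scripted test. The following is a minimal Bash sketch, not part of YugabyteDB Anywhere, that checks whether a Python version string falls within the supported 3.5-3.9 range. The function name python_version_supported is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: succeed (exit 0) only if a Python version string is in the
# supported 3.5-3.9 range. With no argument, ask the python3 on PATH.
python_version_supported() {
  local version major minor
  version="${1:-$(python3 -c 'import sys; print("%d.%d" % sys.version_info[:2])')}"
  major="${version%%.*}"   # text before the first dot, e.g. "3"
  minor="${version#*.}"    # text after the first dot, e.g. "6.8"
  minor="${minor%%.*}"     # drop any patch component, e.g. "6"
  [ "$major" = "3" ] && [ "$minor" -ge 5 ] 2>/dev/null && [ "$minor" -le 9 ]
}
```

For example, running python_version_supported && echo supported on a node tests the interpreter that python3 currently resolves to.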

If you are using Python later than v3.6, install the selinux package corresponding to your version of Python. For example, using pip, you can install it as follows:

python3 -m pip install selinux

Refer to Ansible playbook fails with libselinux-python aren't installed on RHEL8 for more information.

If you are using Python later than v3.7, set the Max Python Version (exclusive) Global Configuration option to the Python version. Refer to Manage runtime configuration settings. Note that only a Super Admin user can modify Global Configuration settings.

Set up time synchronization

A local Network Time Protocol (NTP) server or equivalent must be available.

Ensure that an NTP-compatible time service client is installed in the node OS (chrony is installed by default in the standard AlmaLinux 8 instance used in this example). Then configure the time service client to use the available time server. The manual pre-provisioning procedure includes this step and assumes that chrony is the installed client.

Open incoming TCP/IP ports

Database servers need incoming TCP/IP access enabled for communications between themselves and YugabyteDB Anywhere.

Refer to Networking.

Pre-provision nodes manually

This process performs all provisioning tasks on the database nodes that require elevated privileges. After the database nodes have been prepared this way, the YugabyteDB Anywhere universe creation process connects to the nodes only as the yugabyte user, and does not require any elevation of privileges to deploy and operate the YugabyteDB universe.

Physical nodes (or cloud instances) are installed with a standard AlmaLinux 8 server image. The following steps are to be performed on each physical node, prior to universe creation:

- Log in to each database node as a user with sudo enabled (for example, the ec2-user user in AWS).

- Add the following line to the /etc/chrony.conf file:

  server <your-time-server-IP-address> prefer iburst

  Then run the following command:

  sudo chronyc makestep # (force instant sync to NTP server)

- Add a new yugabyte:yugabyte user and group, with /bin/bash as the login shell (set via the -s flag), as follows:

  sudo useradd -u <yugabyte_user_uid> -s /bin/bash --create-home --home-dir <yugabyte_home> yugabyte # (add user yugabyte and create its home directory as specified in <yugabyte_home>)
  sudo passwd yugabyte # (add a password to the yugabyte user)
  sudo su - yugabyte # (change to yugabyte user for execution of next steps)

  - yugabyte_user_uid is a common UID for the yugabyte user across all nodes. Use the same UID for the yugabyte user on all nodes in the same cluster. If you don't use a common UID, you may run into issues, for example, if you are using NFS for backups.

  - yugabyte_home is the path to the Yugabyte home directory. By default, this is /home/yugabyte. If you set a custom path for the yugabyte user's home in the YugabyteDB Anywhere UI, you must use the same path here. Otherwise, you can omit the --home-dir flag.

- Ensure that the yugabyte user has permissions to SSH into the YugabyteDB nodes (as defined in /etc/ssh/sshd_config).

  If you are using a RHEL CIS hardened image and want SSH access to database nodes, you need to manually add the yugabyte user to sshd_config, as follows:

  sudo sed -i '/^AllowUsers / s/$/ yugabyte/' /etc/ssh/sshd_config
  sudo systemctl restart sshd

- If the node is running SELinux and the home directory is not the default, set the correct SELinux ssh context, as follows:

  chcon -R -t ssh_home_t <yugabyte_home>

  The rest of this document assumes a home of /home/yugabyte. If you set a custom path for home, change the path in the examples as appropriate.

- Copy the SSH public key to each DB node. This public key should correspond to the private key entered into the YugabyteDB Anywhere provider.

- Run the following commands as the yugabyte user, after copying the SSH public key file to the user home directory:

  cd ~yugabyte
  mkdir .ssh
  chmod 700 .ssh
  cat <pubkey_file> >> .ssh/authorized_keys
  chmod 400 .ssh/authorized_keys
  exit # (exit from the yugabyte user back to previous user)

- Add the following lines to the /etc/security/limits.conf file (sudo is required):

  * - core unlimited
  * - data unlimited
  * - fsize unlimited
  * - sigpending 119934
  * - memlock 64
  * - rss unlimited
  * - nofile 1048576
  * - msgqueue 819200
  * - stack 8192
  * - cpu unlimited
  * - nproc 12000
  * - locks unlimited

- Modify the following line in the /etc/security/limits.d/20-nproc.conf file:

  * soft nproc 12000

- Execute the following to tune kernel settings:

  - Configure the parameters vm.swappiness and kernel.core_pattern as follows:

    sudo bash -c 'sysctl vm.swappiness=0 >> /etc/sysctl.conf'
    sudo sysctl kernel.core_pattern=/home/yugabyte/cores/core_%p_%t_%E

  - Configure the parameter vm.max_map_count as follows:

    sudo sysctl -w vm.max_map_count=262144
    sudo bash -c 'sysctl vm.max_map_count=262144 >> /etc/sysctl.conf'

  - Validate the change as follows:

    sysctl vm.max_map_count

- Perform the following to prepare and mount the data volume (separate partition for database data):

  - List the available storage volumes, as follows:

    lsblk

  - Perform the following steps for each available volume (all listed volumes other than the root volume), replacing /dev/nvme1n1 with the device name reported by lsblk:

    sudo mkdir /data # (or /data1, /data2 etc)
    sudo mkfs -t xfs /dev/nvme1n1 # (create xfs filesystem over entire volume)
    sudo vi /etc/fstab

  - Add the following line to /etc/fstab:

    /dev/nvme1n1 /data xfs noatime 0 0

  - Exit from vi, and continue, as follows:

    sudo mount -av # (mounts the new volume using the fstab entry, to validate)
    sudo chown yugabyte:yugabyte /data
    sudo chmod 755 /data
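Before moving on, you may want to confirm that the ulimit entries landed where expected. This minimal Bash sketch (function names are hypothetical) only reads the file and reports which of a subset of the entries listed above are missing. It does not check /etc/security/limits.d/20-nproc.conf or the kernel and mount settings.

```shell
#!/usr/bin/env bash
# Sketch: read-only check that a limits.conf-style file contains some of
# the wildcard entries from the step above. Defaults to the real file;
# pass another path to check a copy.
limits_entry_present() {
  local file="$1" item="$2" value="$3"
  # Match "* - <item> <value>", tolerating extra spaces or tabs between fields.
  grep -Eq "^[[:space:]]*\*[[:space:]]+-[[:space:]]+${item}[[:space:]]+${value}[[:space:]]*$" "$file"
}

check_limits_conf() {
  local conf="${1:-/etc/security/limits.conf}" missing=0 pair item value
  # A subset of the required entries, written as item:value pairs.
  for pair in nofile:1048576 nproc:12000 core:unlimited memlock:64; do
    item="${pair%%:*}"
    value="${pair#*:}"
    if ! limits_entry_present "$conf" "$item" "$value"; then
      echo "missing: * - $item $value"
      missing=1
    fi
  done
  # Non-zero if any checked entry is absent.
  return "$missing"
}
```

Run check_limits_conf with no argument to check /etc/security/limits.conf. It prints each missing entry and returns non-zero if any are absent.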

Custom tmp directory for CIS hardened RHEL 8 or 9

For CIS hardened RHEL 8 or 9, if you use the default /tmp directory, prechecks fail with an access denied error when deploying universes.

To resolve this, create a custom tmp directory called /new_tmp on the database nodes:

sudo mkdir -p /new_tmp; sudo chown yugabyte:yugabyte -R /new_tmp

In addition, after you create the on-premises provider, set the provider runtime configuration flag yb.filepaths.remoteTmpDirectory to /new_tmp.

Finally, when creating universes using the provider, set the YB-Master and YB-TServer configuration flag tmp_dir to the custom /new_tmp directory.
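To confirm that the custom directory is usable before running prechecks, you could run a check like the following as the yugabyte user. This is a minimal sketch. The function name check_tmp_dir is hypothetical, and the default path assumes the /new_tmp directory created above.

```shell
#!/usr/bin/env bash
# Sketch: run as the yugabyte user to confirm that the custom tmp
# directory exists and that the current user can create files in it.
check_tmp_dir() {
  local dir="${1:-/new_tmp}" probe
  [ -d "$dir" ] || { echo "not a directory: $dir"; return 1; }
  # mktemp both tests write access and leaves a file we can clean up.
  probe="$(mktemp "$dir/.yb_probe.XXXXXX" 2>/dev/null)" || { echo "not writable: $dir"; return 1; }
  rm -f "$probe"
  echo "ok: $dir"
}
```

For example, sudo su - yugabyte followed by check_tmp_dir should print ok: /new_tmp.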

Install Prometheus Node Exporter

Download the Prometheus Node Exporter, as follows:

wget https://github.com/prometheus/node_exporter/releases/download/v1.7.0/node_exporter-1.7.0.linux-amd64.tar.gz

If you are doing an airgapped installation, download the Node Exporter using a computer connected to the internet and copy it over to the database nodes.
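In an airgapped setup, the tarball is copied between machines, so verifying its checksum on the database node is a reasonable precaution. The following minimal sketch assumes you also downloaded a sha256sums-style file (lines of the form digest, then file name), such as the sha256sums.txt typically published alongside Prometheus releases. Confirm that this file exists for the version you use. The function name verify_tarball is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: verify a downloaded tarball against a sha256sums-style file
# (lines of "<hex digest>  <file name>").
verify_tarball() {
  local tarball="$1" sums="$2" name expected actual
  name="$(basename "$tarball")"
  # Pick the digest recorded for this exact file name.
  expected="$(awk -v n="$name" '$2 == n { print $1 }' "$sums")"
  [ -n "$expected" ] || { echo "no checksum for $name"; return 1; }
  actual="$(sha256sum "$tarball" | awk '{ print $1 }')"
  if [ "$expected" = "$actual" ]; then
    echo "checksum OK: $name"
  else
    echo "checksum MISMATCH: $name"
    return 1
  fi
}
```

For example: verify_tarball /tmp/node_exporter-1.7.0.linux-amd64.tar.gz /tmp/sha256sums.txt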

On each node, perform the following as a user with sudo access:

- Copy the node_exporter-1.7.0.linux-amd64.tar.gz package file that you downloaded into the /tmp directory on each of the YugabyteDB nodes. Ensure that this file is readable by the user (for example, ec2-user).

- Run the following commands:

  sudo mkdir /opt/prometheus
  sudo mkdir /etc/prometheus
  sudo mkdir /var/log/prometheus
  sudo mkdir /var/run/prometheus
  sudo mkdir -p /tmp/yugabyte/metrics
  sudo mv /tmp/node_exporter-1.7.0.linux-amd64.tar.gz /opt/prometheus
  sudo adduser --shell /bin/bash prometheus # (also adds group "prometheus")
  sudo chown -R prometheus:prometheus /opt/prometheus
  sudo chown -R prometheus:prometheus /etc/prometheus
  sudo chown -R prometheus:prometheus /var/log/prometheus
  sudo chown -R prometheus:prometheus /var/run/prometheus
  sudo chown -R yugabyte:yugabyte /tmp/yugabyte/metrics
  sudo chmod -R 755 /tmp/yugabyte/metrics
  sudo chmod +r /opt/prometheus/node_exporter-1.7.0.linux-amd64.tar.gz
  sudo su - prometheus # (user session is now as user "prometheus")

- Run the following commands as user prometheus:

  cd /opt/prometheus
  tar zxf node_exporter-1.7.0.linux-amd64.tar.gz
  exit # (exit from prometheus user back to previous user)

- Edit the following file:

  sudo vi /etc/systemd/system/node_exporter.service

  Add the following to the /etc/systemd/system/node_exporter.service file:

  [Unit]
  Description=node_exporter - Exporter for machine metrics.
  Documentation=https://github.com/William-Yeh/ansible-prometheus
  After=network.target

  [Install]
  WantedBy=multi-user.target

  [Service]
  Type=simple
  User=prometheus
  Group=prometheus
  ExecStart=/opt/prometheus/node_exporter-1.7.0.linux-amd64/node_exporter --web.listen-address=:9300 --collector.textfile.directory=/tmp/yugabyte/metrics

- Exit from vi, and continue, as follows:

  sudo systemctl daemon-reload
  sudo systemctl enable node_exporter
  sudo systemctl start node_exporter

- Check the status of the node_exporter service with the following command:

  sudo systemctl status node_exporter
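Instead of editing the unit file in vi, you can generate it. The following minimal sketch prints the same unit file shown above, with the Node Exporter version, listen port, and textfile directory as parameters (the defaults match the values above). The function name render_node_exporter_unit is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the node_exporter unit file shown above, with the
# version, listen port, and textfile directory as parameters.
render_node_exporter_unit() {
  local version="${1:-1.7.0}" port="${2:-9300}" textfile_dir="${3:-/tmp/yugabyte/metrics}"
  cat <<EOF
[Unit]
Description=node_exporter - Exporter for machine metrics.
Documentation=https://github.com/William-Yeh/ansible-prometheus
After=network.target

[Install]
WantedBy=multi-user.target

[Service]
Type=simple
User=prometheus
Group=prometheus
ExecStart=/opt/prometheus/node_exporter-${version}.linux-amd64/node_exporter --web.listen-address=:${port} --collector.textfile.directory=${textfile_dir}
EOF
}
```

For example, render_node_exporter_unit | sudo tee /etc/systemd/system/node_exporter.service > /dev/null writes the file. After that, run the daemon-reload, enable, and start commands listed above.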

Enable yugabyte user processes to run after logout

To enable services to run even when the yugabyte user is not logged in, run the following command as the yugabyte user:

loginctl enable-linger yugabyte

Then add the following to /home/yugabyte/.bashrc:

export XDG_RUNTIME_DIR=/run/user/$(id -u yugabyte)
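If you rerun provisioning on the same node, appending to .bashrc without checking adds duplicate lines. A minimal sketch of an idempotent append follows. ensure_line is a hypothetical helper, not a YugabyteDB tool.

```shell
#!/usr/bin/env bash
# Sketch: append a line to a file only if that exact line is not
# already present, so re-running provisioning does not duplicate it.
ensure_line() {
  local file="$1" line="$2"
  touch "$file"
  # -x: whole-line match, -F: fixed string (no regex), -q: quiet.
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

As the yugabyte user, run: ensure_line ~/.bashrc 'export XDG_RUNTIME_DIR=/run/user/$(id -u yugabyte)'. The single quotes keep $(id -u yugabyte) unexpanded, so it is evaluated each time .bashrc runs. To confirm that lingering is enabled, loginctl show-user yugabyte --property=Linger should print Linger=yes.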

Install systemd-related database service unit files

You can install systemd-specific database service unit files, as follows:

- Create the directory .config/systemd/user in the yugabyte home directory. For example:

  mkdir -p /home/yugabyte/.config/systemd/user

- Add the following service and timer files to the /home/yugabyte/.config/systemd/user directory you created:

  yb-master.service

  [Unit]
  Description=Yugabyte master service
  Requires=network-online.target
  After=network.target network-online.target multi-user.target
  StartLimitInterval=100
  StartLimitBurst=10

  [Path]
  PathExists=/home/yugabyte/master/bin/yb-master
  PathExists=/home/yugabyte/master/conf/server.conf

  [Service]
  # Start
  ExecStart=/home/yugabyte/master/bin/yb-master --flagfile /home/yugabyte/master/conf/server.conf
  Restart=on-failure
  RestartSec=5
  # Stop -> SIGTERM - 10s - SIGKILL (if not stopped) [matches existing cron behavior]
  KillMode=process
  TimeoutStopFailureMode=terminate
  KillSignal=SIGTERM
  TimeoutStopSec=10
  FinalKillSignal=SIGKILL
  # Logs
  StandardOutput=syslog
  StandardError=syslog
  # ulimit
  LimitCORE=infinity
  LimitNOFILE=1048576
  LimitNPROC=12000

  [Install]
  WantedBy=default.target

  yb-tserver.service

  [Unit]
  Description=Yugabyte tserver service
  Requires=network-online.target
  After=network.target network-online.target multi-user.target
  StartLimitInterval=100
  StartLimitBurst=10

  [Path]
  PathExists=/home/yugabyte/tserver/bin/yb-tserver
  PathExists=/home/yugabyte/tserver/conf/server.conf

  [Service]
  # Start
  ExecStart=/home/yugabyte/tserver/bin/yb-tserver --flagfile /home/yugabyte/tserver/conf/server.conf
  Restart=on-failure
  RestartSec=5
  # Stop -> SIGTERM - 10s - SIGKILL (if not stopped) [matches existing cron behavior]
  KillMode=process
  TimeoutStopFailureMode=terminate
  KillSignal=SIGTERM
  TimeoutStopSec=10
  FinalKillSignal=SIGKILL
  # Logs
  StandardOutput=syslog
  StandardError=syslog
  # ulimit
  LimitCORE=infinity
  LimitNOFILE=1048576
  LimitNPROC=12000

  [Install]
  WantedBy=default.target

  yb-zip_purge_yb_logs.service

  [Unit]
  Description=Yugabyte logs
  Wants=yb-zip_purge_yb_logs.timer

  [Service]
  Type=oneshot
  WorkingDirectory=/home/yugabyte/bin
  ExecStart=/bin/sh /home/yugabyte/bin/zip_purge_yb_logs.sh

  [Install]
  WantedBy=multi-user.target

  yb-zip_purge_yb_logs.timer

  [Unit]
  Description=Yugabyte logs
  Requires=yb-zip_purge_yb_logs.service

  [Timer]
  Unit=yb-zip_purge_yb_logs.service
  # Run hourly at minute 0 (beginning) of every hour
  OnCalendar=00/1:00

  [Install]
  WantedBy=timers.target

  yb-clean_cores.service

  [Unit]
  Description=Yugabyte clean cores
  Wants=yb-clean_cores.timer

  [Service]
  Type=oneshot
  WorkingDirectory=/home/yugabyte/bin
  ExecStart=/bin/sh /home/yugabyte/bin/clean_cores.sh

  [Install]
  WantedBy=multi-user.target

  yb-controller.service

  [Unit]
  Description=Yugabyte Controller
  Requires=network-online.target
  After=network.target network-online.target multi-user.target
  StartLimitInterval=100
  StartLimitBurst=10

  [Path]
  PathExists=/home/yugabyte/controller/bin/yb-controller-server
  PathExists=/home/yugabyte/controller/conf/server.conf

  [Service]
  # Start
  ExecStart=/home/yugabyte/controller/bin/yb-controller-server \
      --flagfile /home/yugabyte/controller/conf/server.conf
  Restart=always
  RestartSec=5
  # Stop -> SIGTERM - 10s - SIGKILL (if not stopped) [matches existing cron behavior]
  KillMode=control-group
  TimeoutStopFailureMode=terminate
  KillSignal=SIGTERM
  TimeoutStopSec=10
  FinalKillSignal=SIGKILL
  # Logs
  StandardOutput=syslog
  StandardError=syslog
  # ulimit
  LimitCORE=infinity
  LimitNOFILE=1048576
  LimitNPROC=12000

  [Install]
  WantedBy=default.target

  yb-clean_cores.timer

  [Unit]
  Description=Yugabyte clean cores
  Requires=yb-clean_cores.service

  [Timer]
  Unit=yb-clean_cores.service
  # Run every 10 minutes offset by 5 (5, 15, 25...)
  OnCalendar=*:0/10:30

  [Install]
  WantedBy=timers.target

  yb-collect_metrics.service

  [Unit]
  Description=Yugabyte collect metrics
  Wants=yb-collect_metrics.timer

  [Service]
  Type=oneshot
  WorkingDirectory=/home/yugabyte/bin
  ExecStart=/bin/bash /home/yugabyte/bin/collect_metrics_wrapper.sh

  [Install]
  WantedBy=multi-user.target

  yb-collect_metrics.timer

  [Unit]
  Description=Yugabyte collect metrics
  Requires=yb-collect_metrics.service

  [Timer]
  Unit=yb-collect_metrics.service
  # Run every 1 minute
  OnCalendar=*:0/1:0

  [Install]
  WantedBy=timers.target

  yb-bind_check.service

  [Unit]
  Description=Yugabyte IP bind check
  Requires=network-online.target
  After=network.target network-online.target multi-user.target
  Before=yb-controller.service yb-tserver.service yb-master.service yb-collect_metrics.timer
  StartLimitInterval=100
  StartLimitBurst=10

  [Path]
  PathExists=/home/yugabyte/controller/bin/yb-controller-server
  PathExists=/home/yugabyte/controller/conf/server.conf

  [Service]
  # Start
  ExecStart=/home/yugabyte/controller/bin/yb-controller-server \
      --flagfile /home/yugabyte/controller/conf/server.conf \
      --only_bind --logtostderr
  Type=oneshot
  KillMode=control-group
  KillSignal=SIGTERM
  TimeoutStopSec=10
  # Logs
  StandardOutput=syslog
  StandardError=syslog

  [Install]
  WantedBy=default.target
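With ten unit files to place, it is easy to miss one. The following minimal sketch lists any of the files named above that are absent from the user unit directory. The function name is hypothetical, and the file list is copied from this section, so update it if your version ships different units.

```shell
#!/usr/bin/env bash
# Sketch: print each expected unit file that is missing from the systemd
# user directory; return non-zero if any are missing.
missing_yb_units() {
  local dir="${1:-/home/yugabyte/.config/systemd/user}" unit missing=0
  for unit in yb-master.service yb-tserver.service \
              yb-zip_purge_yb_logs.service yb-zip_purge_yb_logs.timer \
              yb-clean_cores.service yb-clean_cores.timer \
              yb-controller.service \
              yb-collect_metrics.service yb-collect_metrics.timer \
              yb-bind_check.service; do
    if [ ! -f "$dir/$unit" ]; then
      echo "$unit"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, running missing_yb_units as the yugabyte user prints nothing and returns 0 when all files are present.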

Ulimits on Red Hat Enterprise Linux 8

On Red Hat Enterprise Linux 8-based systems (Red Hat Enterprise Linux 8, Oracle Enterprise Linux 8.x, Amazon Linux 2), also add the following line to both /etc/systemd/system.conf and /etc/systemd/user.conf:

DefaultLimitNOFILE=1048576

You must reboot the system for these two settings to take effect.
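Before rebooting, you can confirm that both files carry the setting on an uncommented line. This minimal sketch (the function name is hypothetical) only reads the files.

```shell
#!/usr/bin/env bash
# Sketch: report whether each systemd manager config file sets
# DefaultLimitNOFILE=1048576 on an uncommented line.
check_default_nofile() {
  local status=0 f
  for f in "$@"; do
    if grep -Eq '^[[:space:]]*DefaultLimitNOFILE=1048576[[:space:]]*$' "$f" 2>/dev/null; then
      echo "ok: $f"
    else
      echo "missing: $f"
      status=1
    fi
  done
  return "$status"
}
```

For example: check_default_nofile /etc/systemd/system.conf /etc/systemd/user.conf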

Install node agent

The YugabyteDB Anywhere node agent is used to manage communication between YugabyteDB Anywhere and the node. When node agent is installed, YugabyteDB Anywhere no longer requires SSH or sudo access to nodes. For more information, refer to Node agent FAQ.

For automated and assisted manual provisioning, node agents are installed onto instances automatically when adding instances, or when running the pre-provisioning script using the --install_node_agent flag.

To install node agent manually for fully manual provisioning, do the following as the yugabyte user:

- If you are re-provisioning the node (for example, you are patching the Linux operating system and node agent was previously installed on the node), unregister the node agent before installing it again.

- Download the installer using the YugabyteDB Anywhere API token of the Super Admin. Run the following command from your YugabyteDB nodes:

  curl https://<yugabytedb_anywhere_address>/api/v1/node_agents/download --fail --header 'X-AUTH-YW-API-TOKEN: <api_token>' > installer.sh && chmod +x installer.sh

  To create an API token, navigate to your User Profile and click Generate Key.

- Run the following command to download the node agent's .tgz file, which installs node agent and starts the interactive configuration:

  ./installer.sh -c install -u https://<yba_address> -t <api_token>

  For example, if you run the following:

  # To ignore verification of YBA certificate, add --skip_verify_cert.
  ./installer.sh -c install -u https://10.98.0.42 -t 301fc382-cf06-4a1b-b5ef-0c8c45273aef

  You should get output similar to the following:

  * Starting YB Node Agent install
  * Creating Node Agent Directory
  * Changing directory to node agent
  * Creating Sub Directories
  * Downloading YB Node Agent build package
  * Getting Linux/amd64 package
  * Downloaded Version - 2.17.1.0-PRE_RELEASE
  * Extracting the build package
  * The current value of Node IP is not set; Enter new value or enter to skip: 10.9.198.2
  * The current value of Node Name is not set; Enter new value or enter to skip: Test
  * Select your Onprem Provider
  1. Provider ID: 41ac964d-1db2-413e-a517-2a8d840ff5cd, Provider Name: onprem
  Enter the option number: 1
  * Select your Instance Type
  1. Instance Code: c5.large
  Enter the option number: 1
  * Select your Region
  1. Region ID: dc0298f6-21bf-4f90-b061-9c81ed30f79f, Region Code: us-west-2
  Enter the option number: 1
  * Select your Zone
  1. Zone ID: 99c66b32-deb4-49be-85f9-c3ef3a6e04bc, Zone Name: us-west-2c
  Enter the option number: 1
  • Completed Node Agent Configuration
  • Node Agent Registration Successful
  You can install a systemd service on linux machines by running sudo node-agent-installer.sh -c install_service --user yugabyte (Requires sudo access).

- Run the install_service command as a sudo user:

  sudo node-agent-installer.sh -c install_service --user yugabyte

  This installs node agent as a systemd service. This is required so that the node agent can perform self-upgrade, database installation and configuration, and other functions.

When the installation has been completed, the configurations are saved in the config.yml file located in the node-agent/config/ directory. You should refrain from manually changing values in this file.
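If you script the download and install steps, a wrapper such as the following may help. It is a minimal sketch. The function names are hypothetical. It uses curl's --output option in place of shell redirection so that the command can be echoed, and setting DRY_RUN=1 prints the commands without running them. The installer still prompts interactively, and you must still run the install_service step separately as a sudo user.

```shell
#!/usr/bin/env bash
# Sketch: the installer download and install steps above, wrapped in one
# function. Arguments are your YBA address and a Super Admin API token.
# Set DRY_RUN=1 to print each command instead of running it.
na_run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$@"
  else
    "$@"
  fi
}

install_node_agent() {
  local yba_address="$1" api_token="$2"
  # --output writes the file directly, so the command can also be echoed in dry-run mode.
  na_run curl "https://${yba_address}/api/v1/node_agents/download" --fail \
    --header "X-AUTH-YW-API-TOKEN: ${api_token}" --output installer.sh || return 1
  na_run chmod +x installer.sh || return 1
  # Starts the interactive configuration described above.
  na_run ./installer.sh -c install -u "https://${yba_address}" -t "${api_token}"
}
```

For example, DRY_RUN=1 install_node_agent 10.98.0.42 <api_token> shows the three commands that would run.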

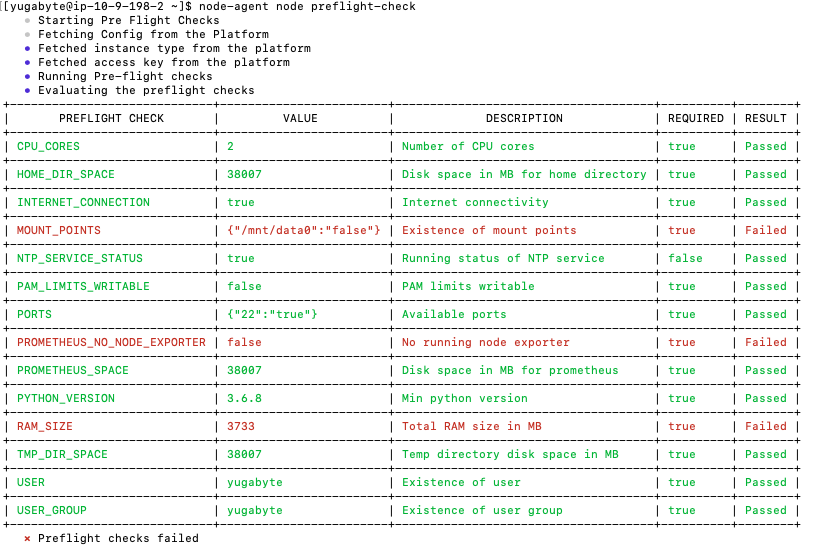

Preflight check

After the node agent is installed, configured, and connected to YugabyteDB Anywhere, you can perform a series of preflight checks without sudo privileges by using the following command:

node-agent node preflight-check

The result of the check is forwarded to YugabyteDB Anywhere for validation. The validated information is posted in a tabular form on the terminal. If there is a failure against a required check, you can apply a fix and then rerun the preflight check.

Expect an output similar to the following:

If the preflight check is successful, you can add the node to the provider (if required) by running the following:

node-agent node preflight-check --add_node

Reconfigure a node agent

If you want to use a node that has already been provisioned in a different provider, you can reconfigure the node agent.

To reconfigure a node for use in a different provider, do the following:

- Remove the node instance from the provider using the following command:

  node-agent node delete-instance

- Run the configure command to start the interactive configuration. This also registers the node agent with YBA.

  node-agent node configure -t <api_token> -u https://<yba_address>

  For example, if you run the following:

  node-agent node configure -t 1ba391bc-b522-4c18-813e-71a0e76b060a -u https://10.98.0.42

  You should get output similar to the following:

  * The current value of Node Name is set to node1; Enter new value or enter to skip:
  * The current value of Node IP is set to 10.9.82.61; Enter new value or enter to skip:
  * Select your Onprem Manually Provisioned Provider.
  1. Provider ID: b56d9395-1dda-47ae-864b-7df182d07fa7, Provider Name: onprem-provision-test1
  * The current value is Provider ID: b56d9395-1dda-47ae-864b-7df182d07fa7, Provider Name: onprem-provision-test1. Enter new option number or enter to skip:
  * Select your Instance Type.
  1. Instance Code: c5.large
  * The current value is Instance Code: c5.large. Enter new option number or enter to skip:
  * Select your Region.
  1. Region ID: 0a185358-3de0-41f2-b106-149be3bf07dd, Region Code: us-west-2
  * The current value is Region ID: 0a185358-3de0-41f2-b106-149be3bf07dd, Region Code: us-west-2. Enter new option number or enter to skip:
  * Select your Zone.
  1. Zone ID: c9904f64-a65b-41d3-9afb-a7249b2715d1, Zone Code: us-west-2a
  * The current value is Zone ID: c9904f64-a65b-41d3-9afb-a7249b2715d1, Zone Code: us-west-2a. Enter new option number or enter to skip:
  • Completed Node Agent Configuration
  • Node Agent Registration Successful

If you are running v2.18.5 or earlier, the node must be unregistered first. Use the following procedure:

- If the node instance has been added to a provider, remove the node instance from the provider.

- Stop the systemd service as a sudo user:

  sudo systemctl stop yb-node-agent

- Run the configure command to start the interactive configuration. This also registers the node agent with YBA.

  node-agent node configure -t <api_token> -u https://<yba_address>

- Start the systemd service as a sudo user:

  sudo systemctl start yb-node-agent

- Verify that the service is up:

  sudo systemctl status yb-node-agent

- Run preflight checks and add the node as the yugabyte user:

  node-agent node preflight-check --add_node

Unregister node agent

When performing some tasks, you may need to unregister the node agent from a node.

To unregister node agent, run the following command:

node-agent node unregister

After running this command, YBA no longer recognizes the node agent.

If the node agent configuration is corrupted, the command may fail. In this case, unregister the node agent using the API as follows:

- Obtain the node agent ID:

  curl -k --header 'X-AUTH-YW-API-TOKEN:<api_token>' 'https://<yba_address>/api/v1/customers/<customer_id>/node_agents?nodeIp=<node_agent_ip>'

  You should see output similar to the following:

  [{
    "uuid":"ec7654b1-cf5c-4a3b-aee3-b5e240313ed2",
    "name":"node1",
    "ip":"10.9.82.61",
    "port":9070,
    "customerUuid":"f33e3c9b-75ab-4c30-80ad-cba85646ea39",
    "version":"2.18.6.0-PRE_RELEASE",
    "state":"READY",
    "updatedAt":"2023-12-19T23:56:43Z",
    "config":{
      "certPath":"/opt/yugaware/node-agent/certs/f33e3c9b-75ab-4c30-80ad-cba85646ea39/ec7654b1-cf5c-4a3b-aee3-b5e240313ed2/0",
      "offloadable":false
    },
    "osType":"LINUX",
    "archType":"AMD64",
    "home":"/home/yugabyte/node-agent",
    "versionMatched":true,
    "reachable":false
  }]

- Use the value of the uuid field as <node_agent_id> in the following command:

  curl -k -X DELETE --header 'X-AUTH-YW-API-TOKEN:<api_token>' https://<yba_address>/api/v1/customers/<customer_id>/node_agents/<node_agent_id>
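If jq is not available on the node, you can extract the UUID with standard text tools. This minimal sketch assumes that the response contains a single node agent, as in the example above. The function name node_agent_uuid is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: read the node_agents JSON on stdin and print the value of the
# first "uuid" key. Keys such as "customerUuid" do not match because the
# pattern requires a quote immediately before "uuid".
node_agent_uuid() {
  grep -o '"uuid" *: *"[^"]*"' | head -n 1 | cut -d'"' -f4
}
```

For example: curl -k --header 'X-AUTH-YW-API-TOKEN:<api_token>' 'https://<yba_address>/api/v1/customers/<customer_id>/node_agents?nodeIp=<node_agent_ip>' | node_agent_uuid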