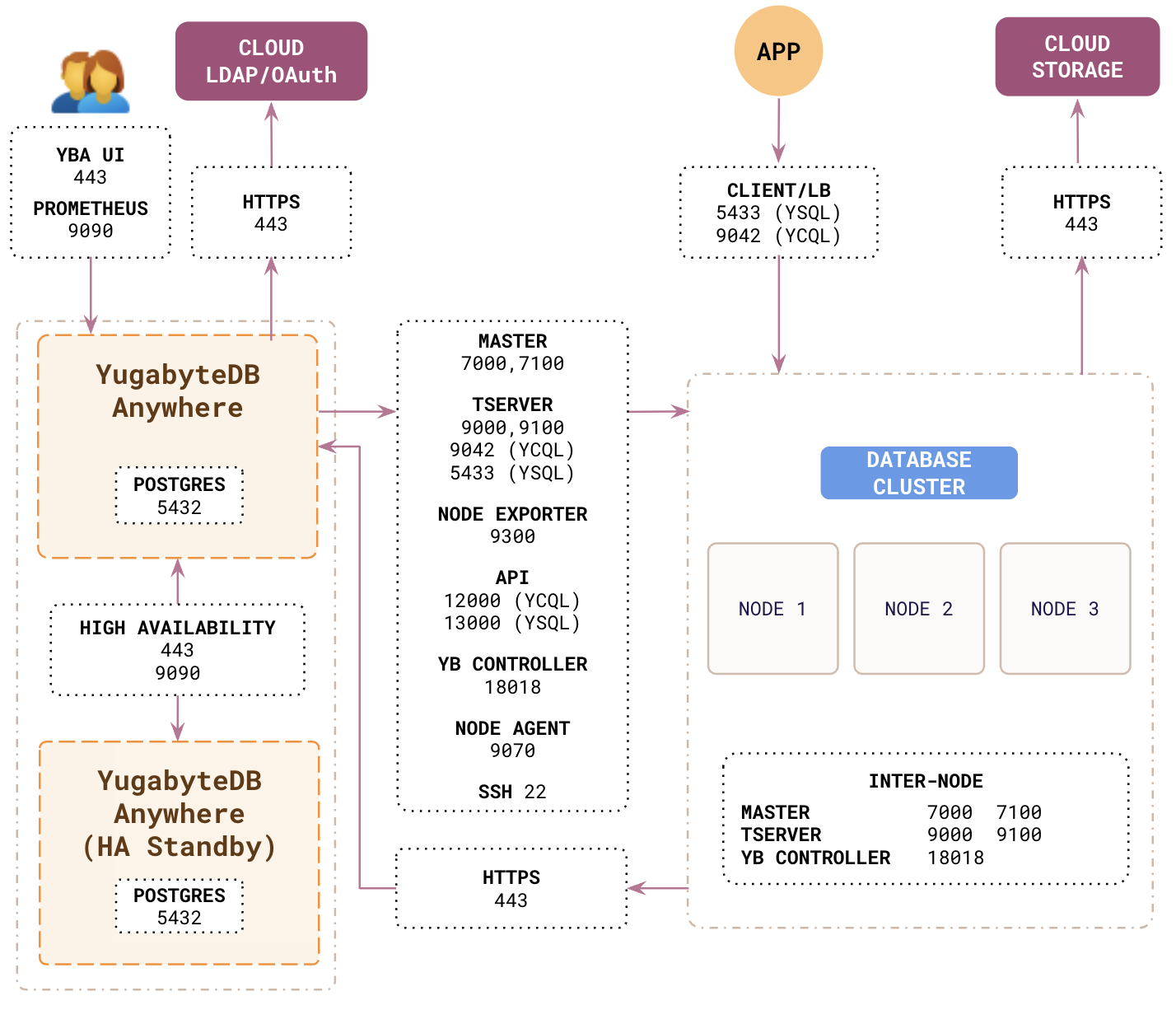

Networking requirements

YugabyteDB Anywhere (YBA) needs to be able to access nodes that will be used to create universes, and the nodes that make up universes need to be accessible to each other and to applications.

Global port requirements

The following ports need to be open.

| From | To | Requirements |
|---|---|---|
| DB nodes | DB nodes | Open the following ports for communication between nodes in clusters. They do not need to be exposed to your application. For universes with Node-to-Node encryption in transit, communication over these ports is encrypted. |
| YBA node | DB nodes | Open the following ports on database cluster nodes so that YugabyteDB Anywhere can provision them. |
| Application | DB nodes | Open the following ports on database cluster nodes so that applications can connect via APIs. For universes with Client-to-Node encryption in transit, communication over these ports is encrypted. Universes can also be configured with database authorization and authentication to manage access. |
| DB nodes | YBA node | Open the following port on the YugabyteDB Anywhere node so that node agents can communicate. |
| Operator | YBA node | Open the following ports on the YugabyteDB Anywhere node so that administrators can access the YBA UI and monitor the system and node metrics. These ports are also used by standby YBA instances in high availability setups. |
| DB nodes, YBA node | Storage | Database clusters must be allowed to contact backup storage (such as AWS S3, GCP GCS, Azure blob). |

Networking for xCluster

When two database clusters are connected via xCluster replication, you need to ensure that the yb-master and yb-tserver RPC ports (default 7100 and 9100 respectively) are open in both directions between all nodes in both clusters.
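As a rough way to verify this before setting up replication, the following sketch attempts a TCP connection to each RPC port on each node of the other cluster. It assumes the default ports 7100 and 9100 mentioned above; the function names and the `XCLUSTER_PORTS` mapping are illustrative and not part of any YugabyteDB tooling. A successful connection only shows that the port is reachable from the machine running the script, so run it from nodes in each cluster against the other to cover both directions.

```python
import socket

# Default RPC ports named in the text; change these if you overrode them.
XCLUSTER_PORTS = {"yb-master RPC": 7100, "yb-tserver RPC": 9100}


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def check_xcluster_ports(remote_nodes, ports=XCLUSTER_PORTS, timeout=3.0):
    """Map (host, port name) to reachability for every node in the other cluster."""
    return {
        (host, name): port_is_open(host, port, timeout)
        for host in remote_nodes
        for name, port in ports.items()
    }
```

For example, calling `check_xcluster_ports(["10.1.0.11", "10.1.0.12"])` (hypothetical addresses) from a node in the source cluster returns a dictionary whose `False` entries point at ports that are still blocked.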

Overriding default port assignments

When deploying a universe, you can customize the following ports:

- YB-Master HTTP(S)

- YB-Master RPC

- YB-TServer HTTP(S)

- YB-TServer RPC

- YSQL server

- YSQL API

- YCQL server

- YCQL API

- Prometheus Node Exporter HTTP

If you intend to customize these port numbers, replace each default with the port number that the corresponding process should use. Any value from 1024 to 65535 is valid, as long as it does not conflict with anything else running on the nodes to be provisioned.
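The rules above (range 1024 to 65535, no conflicts) can be checked before you enter the values. The following is a minimal sketch, not part of YBA: the function name, the dictionary keys, and the `ports_in_use` argument are all illustrative, and you would need to gather the ports already in use on your nodes yourself.

```python
from collections import Counter

VALID_RANGE = range(1024, 65536)  # 1024 to 65535 inclusive


def validate_port_overrides(overrides, ports_in_use=()):
    """Return a list of problems; an empty list means the overrides look usable.

    overrides: mapping of a descriptive label to the custom port number.
    ports_in_use: ports already taken by other software on the nodes.
    """
    problems = []
    for name, port in overrides.items():
        if port not in VALID_RANGE:
            problems.append(f"{name}: {port} is outside 1024-65535")
        if port in ports_in_use:
            problems.append(f"{name}: {port} is already used on the node")
    # Two customized services must not share one port either.
    counts = Counter(overrides.values())
    for port, n in sorted(counts.items()):
        if n > 1:
            names = sorted(k for k, v in overrides.items() if v == port)
            problems.append(
                f"port {port} is assigned to several services: {', '.join(names)}"
            )
    return problems
```

Returning a list of messages rather than raising on the first error lets you see every conflict in one pass.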

Provider-specific requirements

In on-premises environments, both network and local firewalls on the VM (such as iptables or ufw) need to be configured for the required networking.

In AWS, you need to identify the specific Virtual Private Clouds (VPCs) and subnets in the regions in which YBA and database VMs will be deployed, and the security groups you intend to use for these VMs.

When YBA and database nodes are all deployed in the same VPC, achieving the required network configuration primarily involves setting up the appropriate security group(s).

However, if YBA and database nodes are in different VPCs (for example, in a multi-region cluster), the setup is more complex. Use VPC peering to provide connectivity between the VPCs, in addition to setting up the appropriate security groups to open the required ports.

In the VPC peering approach, peering connections must be established in an N x N matrix, such that every VPC in every region you configure is peered to every other VPC in every other region. The route table in each regional VPC should contain an entry for the CIDR block of every other VPC, routing that traffic through the peering connection to that VPC. Security groups in each VPC can be hardened by opening the relevant ports only to the CIDR blocks of the VPCs from which you expect traffic.

If you deploy YBA in a different VPC than the ones in which you intend to deploy database nodes, then the YBA VPC must also be part of this VPC mesh. You also need to set up routing table entries in the source (YBA) VPC, and add one further ingress rule for a CIDR block (or public IP) to the security groups for the YugabyteDB nodes, to allow traffic from YBA or its VPC.
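To get a feel for how much configuration the mesh implies, the following sketch enumerates the peering connections and route entries for a set of VPCs, including the YBA VPC. It is a planning aid only and makes no AWS API calls; the function names, VPC labels, and CIDR blocks are hypothetical. It relies on the fact that one peering connection serves both VPCs in a pair, so n VPCs need n(n-1)/2 connections, while each VPC still needs its own route entry for every peer.

```python
from itertools import combinations


def peering_pairs(vpcs):
    """Every unordered pair of VPCs; each pair needs one peering connection."""
    return list(combinations(sorted(vpcs), 2))


def required_routes(vpc_cidrs):
    """For each VPC, the peer CIDR blocks its route table must cover."""
    return {
        vpc: {other: cidr for other, cidr in vpc_cidrs.items() if other != vpc}
        for vpc in vpc_cidrs
    }


# Hypothetical layout: YBA in its own VPC plus two regional database VPCs.
example_cidrs = {
    "yba": "10.0.0.0/16",
    "us-west-2": "10.1.0.0/16",
    "us-east-1": "10.2.0.0/16",
}

for a, b in peering_pairs(example_cidrs):
    print(f"peer {a} <-> {b}")
for vpc, routes in required_routes(example_cidrs).items():
    for peer, cidr in routes.items():
        print(f"{vpc}: route {cidr} via peering connection to {peer}")
```

The same per-VPC CIDR lists are the natural source ranges for the hardened security group rules described above.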

When creating the AWS provider, YBA needs to know whether the database nodes will have Internet access, or be airgapped. If your nodes will have Internet access, you must do one of the following:

- Assign public IP addresses to the VMs.

- Set up a NAT gateway. You must configure the NAT gateway before creating the VPC. For more information, see NAT and Creating a VPC with public and private subnets in the AWS documentation.

In GCP, YBA deploys all database cluster VMs in a single GCP VPC. You need to identify the VPC and specific subnets for the regions in which VMs are deployed and configure the appropriate GCP firewall rules to open the required ports.

Configure firewall rules to allow YBA to communicate with database nodes, and database nodes to communicate with each other, as per the network diagram.

If the YBA VM and the database cluster VMs are deployed in separate GCP VPCs, you must also peer these VPCs to enable the required connectivity. Typically, routes for the peered VPCs are set up automatically but may need to be configured explicitly in some cases.

When creating the GCP provider, YBA needs to know whether the database nodes will have Internet access, or be airgapped. If your nodes will have Internet access, you must do one of the following:

- Assign public IP addresses to the VMs.

- Set up a NAT gateway. You must configure the NAT gateway before creating the VPC. For more information, see network address translation (NAT) gateway in the GCP documentation.

In Azure, you need to identify the specific virtual networks and subnets for the regions in which the YBA and database VMs are deployed, and the (optional) network security groups (NSGs) to be associated with these VMs.

When YBA and database cluster nodes are all deployed in the same virtual network, meeting the network requirements involves setting up the appropriate NSGs to allow the required traffic. NSGs can either be set up on the subnets directly or specified to YBA for associating with the database cluster VMs.

When YBA and database nodes are deployed in different virtual networks (for example, in a multi-region universe), the setup is more complex. Use virtual network peering, in addition to setting up the appropriate NSGs. In the peering approach, peering connections must be established in an N x N matrix, such that every virtual network in every region you configure is peered to every other virtual network in every other region. Routes for the peering connections can be automatically configured by Azure or configured manually. If you deploy YBA in a different virtual network than the ones in which you intend to deploy database VMs, then the YBA virtual network must also be part of the mesh.