Handling region failures

YugabyteDB is resilient to a single-domain failure in a deployment with a replication factor (RF) of 3. To survive region failures, you deploy across multiple regions. Let's see how YugabyteDB survives a region failure.

Setup

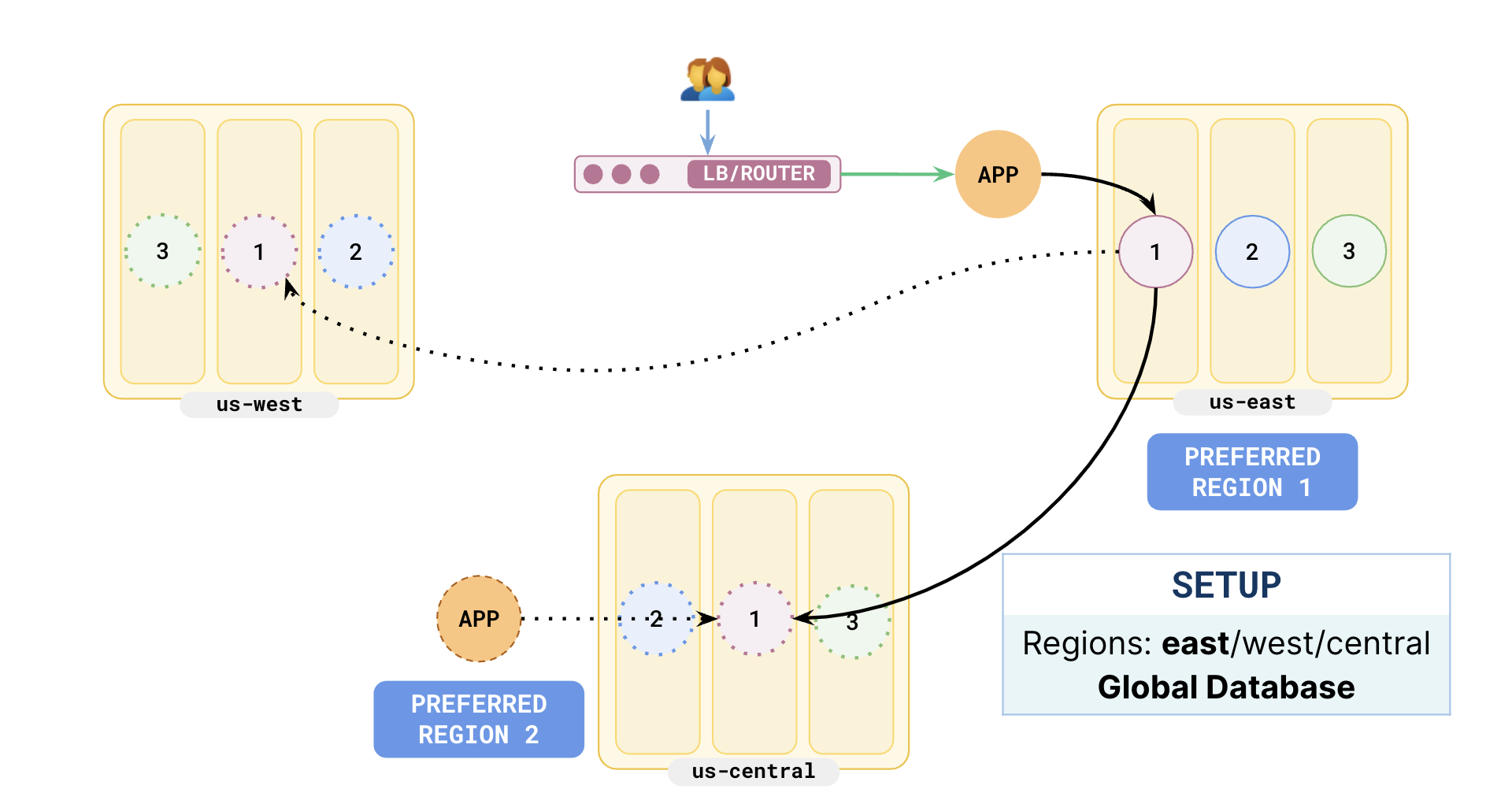

Consider a scenario where you have deployed your database across three regions: us-west, us-east, and us-central. Typically, you choose one region as the preferred region for your database. This is the region where your applications are active. Next, determine which region is closest to the preferred region, use it as the failover region for your applications, and set it as the second preferred region for the database. The third region needs no explicit setting and automatically becomes the third preferred region.

Set up a local cluster

If a local universe is currently running, first destroy it.
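The cleanup step can be sketched as follows. This is a minimal example that assumes the three base directories used on this page (`${HOME}/var/node1` through `node3`) and that you run it from the YugabyteDB installation directory. The `destroy_local_universe` function and the `DRY_RUN` switch are illustrative helpers, not part of yugabyted.

```shell
# Hypothetical helper: destroy any local nodes left over from a previous run.
# Assumes the base directories used on this page.
# With DRY_RUN=1 the commands are only printed, not executed.
destroy_local_universe() {
  local node base_dir
  for node in node1 node2 node3; do
    base_dir="${HOME}/var/${node}"
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "./bin/yugabyted destroy --base_dir=${base_dir}"
    elif [ -d "${base_dir}" ]; then
      # Only call yugabyted for nodes that actually exist on disk.
      ./bin/yugabyted destroy --base_dir="${base_dir}"
    fi
  done
}
```

Call `destroy_local_universe` to remove the nodes, or `DRY_RUN=1 destroy_local_universe` to preview the commands first.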

Start a local three-node universe with an RF of 3 by first creating a single node, as follows:

./bin/yugabyted start \
    --advertise_address=127.0.0.1 \
    --base_dir=${HOME}/var/node1 \
    --cloud_location=aws.us-east.us-east-1a

On macOS, the additional nodes need loopback addresses configured, as follows:

sudo ifconfig lo0 alias 127.0.0.2
sudo ifconfig lo0 alias 127.0.0.3

Next, join more nodes with the previous node as needed. yugabyted automatically applies a replication factor of 3 when a third node is added.

Start the second node as follows:

./bin/yugabyted start \
    --advertise_address=127.0.0.2 \
    --base_dir=${HOME}/var/node2 \
    --cloud_location=aws.us-central.us-central-1a \
    --join=127.0.0.1

Start the third node as follows:

./bin/yugabyted start \
    --advertise_address=127.0.0.3 \
    --base_dir=${HOME}/var/node3 \
    --cloud_location=aws.us-west.us-west-1a \
    --join=127.0.0.1

After starting the yugabyted processes on all the nodes, configure the data placement constraint of the universe, as follows:

./bin/yugabyted configure data_placement --base_dir=${HOME}/var/node1 --fault_tolerance=region

This command can be executed on any node where you already started YugabyteDB.

To check the status of a running multi-node universe, run the following command:

./bin/yugabyted status --base_dir=${HOME}/var/node1
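The preferred-region ordering described in the scenario (us-east first, us-central second, us-west third) can be applied to the local universe with the `set_preferred_zones` command of `yb-admin`. The following is a sketch, assuming the default master RPC port 7100 and a yb-admin version that accepts the `zone:priority` form. The two function names are illustrative helpers.

```shell
# Sketch: rank the three zones from this page in order of preference.
# Assumes the default master RPC port (7100) on each local node.
MASTERS="127.0.0.1:7100,127.0.0.2:7100,127.0.0.3:7100"

# Hypothetical helper: print zone:priority arguments for zones
# passed in order of preference (first argument = priority 1).
preferred_zone_args() {
  local priority=1 zone args=""
  for zone in "$@"; do
    args="${args}${args:+ }${zone}:${priority}"
    priority=$((priority + 1))
  done
  echo "${args}"
}

# Hypothetical helper: apply the ordering with yb-admin.
set_preferred_regions() {
  # Word splitting of the helper output is intentional: one argument per zone.
  # shellcheck disable=SC2046
  ./bin/yb-admin -master_addresses "${MASTERS}" set_preferred_zones \
    $(preferred_zone_args aws.us-east.us-east-1a \
                          aws.us-central.us-central-1a \
                          aws.us-west.us-west-1a)
}
```

Run `set_preferred_regions` from the YugabyteDB installation directory after the data placement has been configured.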

Alternatively, to set up a universe, refer to Create a multi-zone universe.

In the following illustration, the leaders are in us-east (the preferred region), which is also where the applications are active. The standby application is in us-central and serves as the failover. This region has been set as the second preferred region for the database.

Users reach the application through a router or load balancer that points to the active application in us-east.

When the application writes, the leaders replicate to the followers in both us-west and us-central.

Because us-central is closer to us-east than us-west is, the followers in us-central acknowledge writes faster, so they stay up to date with the leaders in us-east.

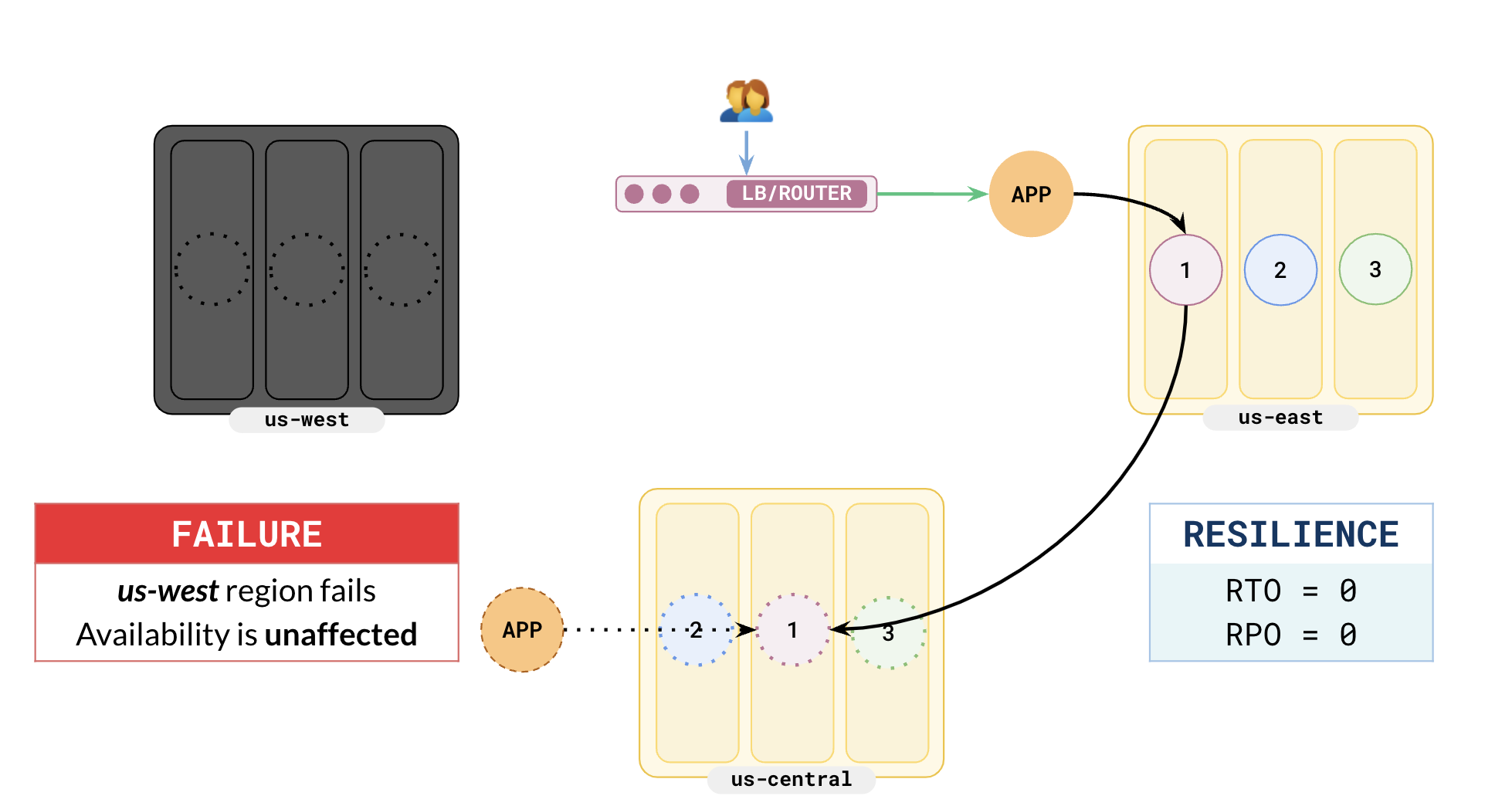

Third region failure

If the third (least preferred) region fails, availability is not affected at all. This is because the leaders will be in the preferred region, and there will be one follower in the second preferred region. There is no data loss and no recovery is needed.

Simulate failure of the third region locally

To simulate the failure of the third region locally, stop the third node as follows:

./bin/yugabyted stop --base_dir=${HOME}/var/node3

The following illustration shows the third region (us-west) failing. As us-east has been set as the preferred region, there are no leaders in us-west. When this region fails, availability of the applications is not affected at all because one follower (in us-central) is still active, so replication continues correctly.

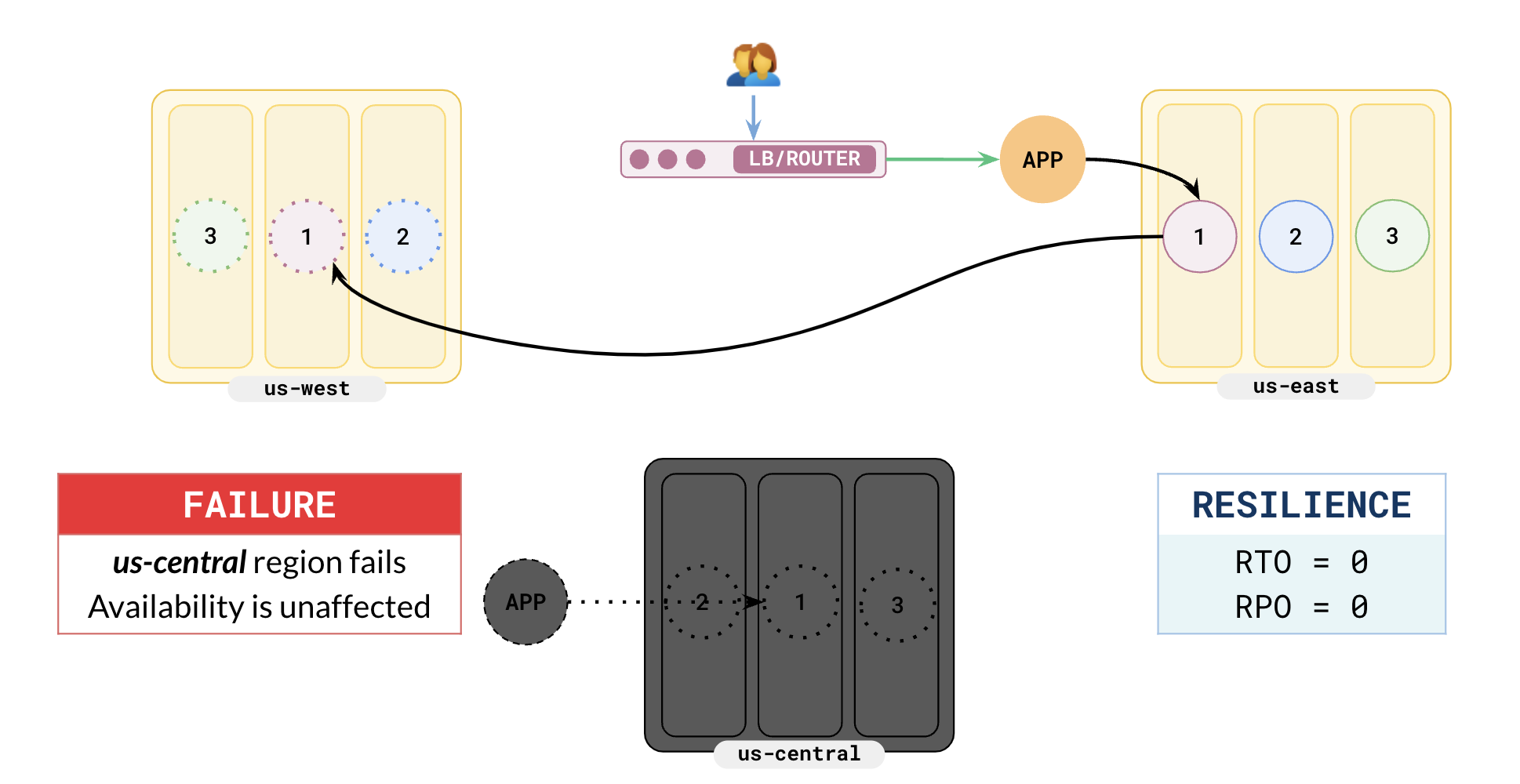
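To confirm locally that writes still succeed after a node is stopped, you can send a small write through a surviving node. The following is a minimal sketch, assuming the default `yugabyte` database and user; the `check_write` function, the `YSQLSH` override, and the `failover_check` table are hypothetical names introduced for illustration.

```shell
# Hypothetical helper: confirm that a node still accepts writes after a failure.
# Assumes the default database and user; failover_check is a throwaway table.
# Set YSQLSH to point at a different ysqlsh binary if needed.
check_write() {
  local host="$1"
  "${YSQLSH:-./bin/ysqlsh}" -h "${host}" \
    -c "CREATE TABLE IF NOT EXISTS failover_check (id INT PRIMARY KEY, note TEXT);" \
    -c "INSERT INTO failover_check VALUES (1, 'ok') ON CONFLICT (id) DO UPDATE SET note = 'ok';"
}
```

For example, `check_write 127.0.0.1` sends the write to the node in the preferred region. The same check applies after stopping the second node.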

Secondary region failure

When the second preferred region fails, availability is not affected at all. This is because there are no leaders in this region; all the leaders are in the preferred region. However, write latency can increase because every write to a leader now has to wait for acknowledgment from the third region, which is farther away. Reads are not affected because the primary application reads from the leaders in the preferred region. There is no data loss.

Simulate failure of the secondary region locally

To simulate the failure of the secondary region locally, stop the second node as follows:

./bin/yugabyted stop --base_dir=${HOME}/var/node2

In the following illustration, you can see that as us-central has failed, writes are replicated to us-west. This leads to higher write latencies as us-west is farther away from us-east than us-central. But there is no data loss.

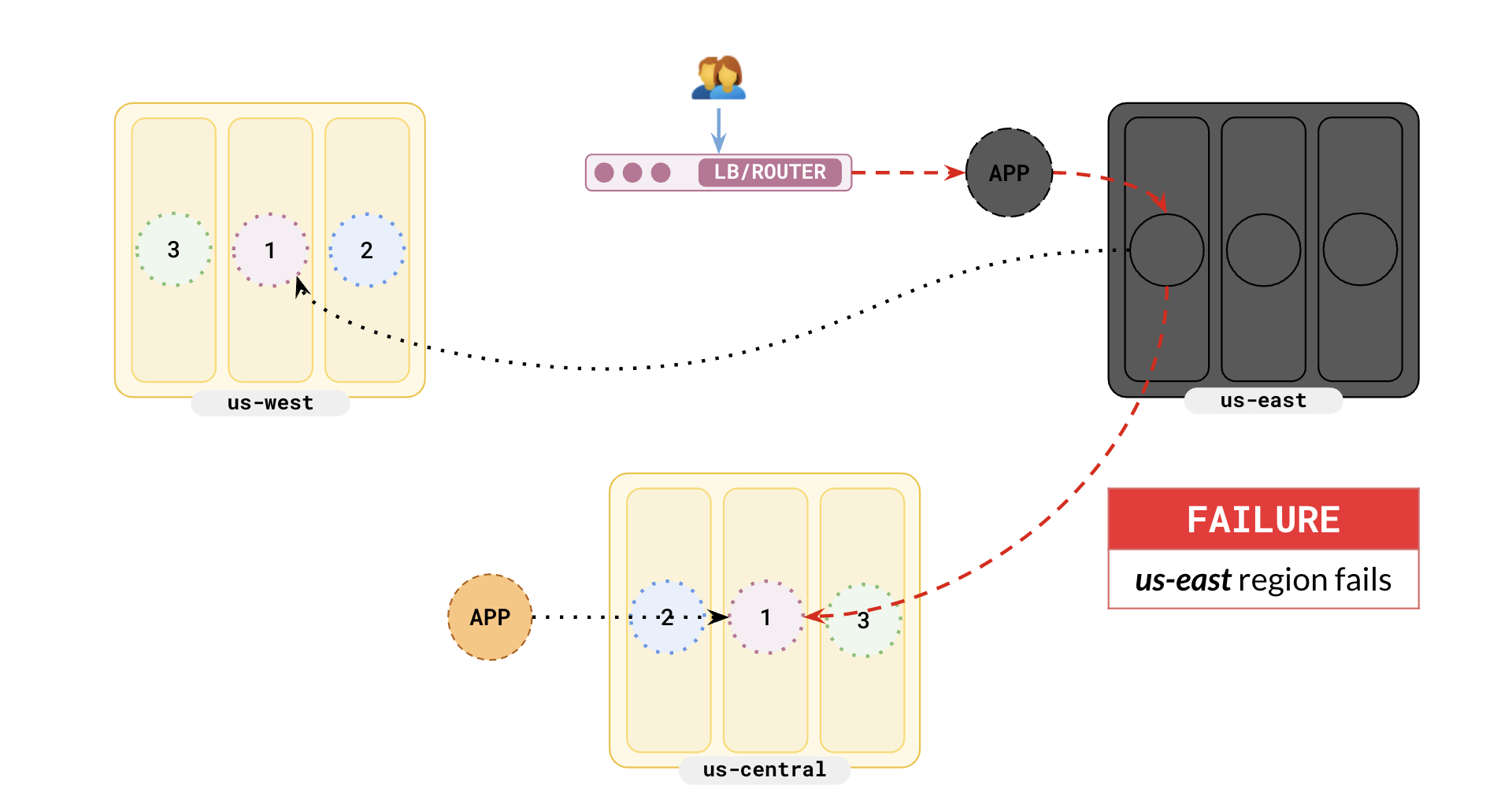

Preferred region failure

When the preferred region fails, there is no data loss but availability will be affected for a short time. This is because all the leaders are located in this region, and your applications are also active in this region. At the moment of the preferred region failure, all tablets will have no leaders.

Simulate failure of the primary region locally

To simulate the failure of the primary region locally, stop the first node as follows:

./bin/yugabyted stop --base_dir=${HOME}/var/node1

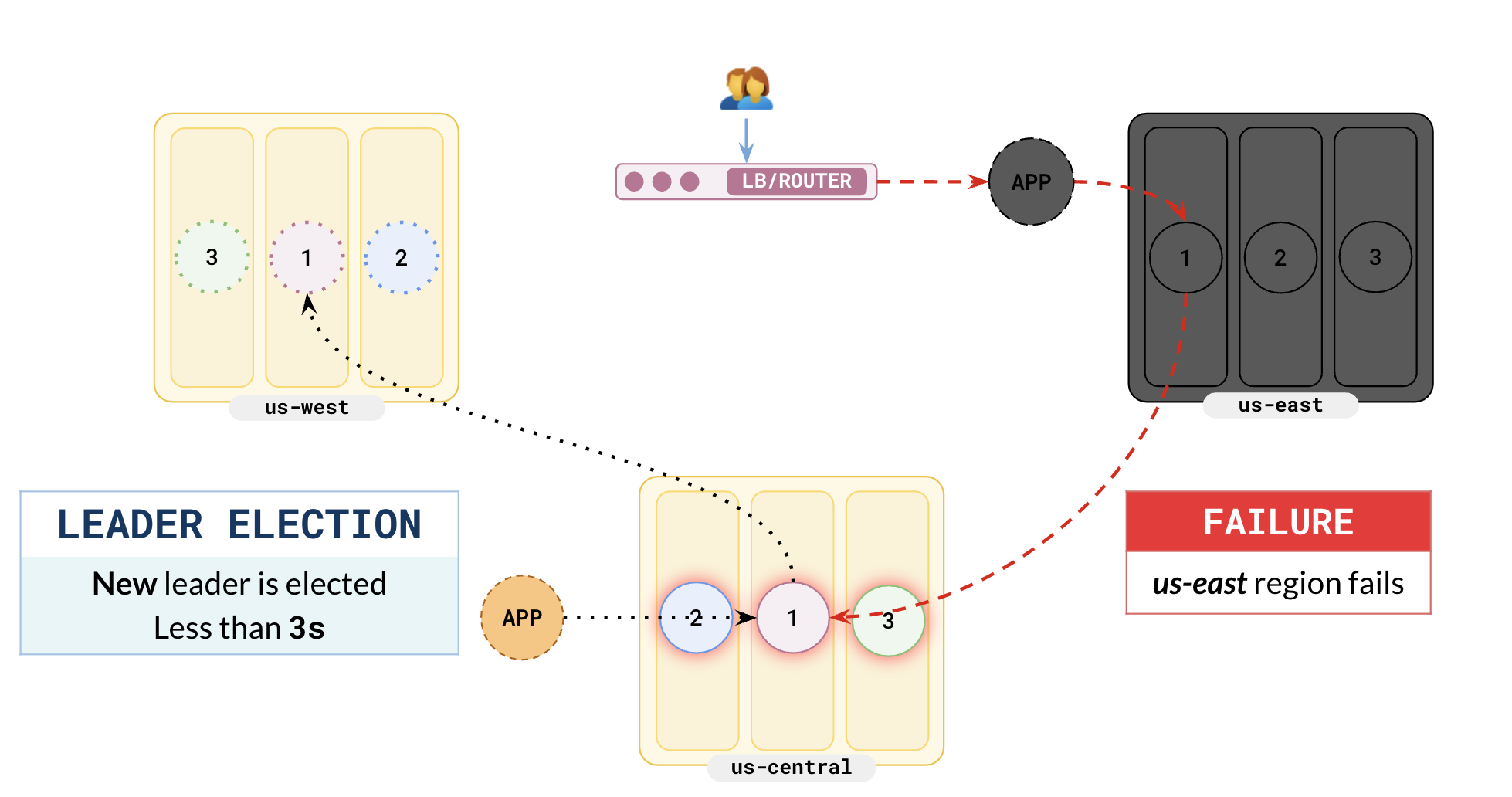

The following illustration shows the failure of the preferred region, us-east.

Because there are no leaders for the tablets, leader election is triggered and the followers in the secondary region will be promoted to leaders. Leader election typically takes about 3 seconds.
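Because the new leaders are not available instantly, a client or script that connects to the secondary region right after the failure may need to retry for a few seconds. The following is a minimal sketch of such a retry loop; `retry_until_ok` and `RETRY_DELAY` are hypothetical names, and the attempt limit in the example is an arbitrary choice.

```shell
# Hypothetical helper: run a command repeatedly until it succeeds
# or the attempt limit is reached. Returns non-zero if it never succeeds.
# RETRY_DELAY is the pause between attempts in seconds (default 1).
retry_until_ok() {
  local max_attempts="$1"; shift
  local attempt=1
  until "$@"; do
    if [ "${attempt}" -ge "${max_attempts}" ]; then
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "${RETRY_DELAY:-1}"
  done
}

# Example: wait for the us-central node to answer a query, up to 10 attempts.
# retry_until_ok 10 ./bin/ysqlsh -h 127.0.0.2 -c "SELECT 1;"
```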

The following illustration shows that the followers in us-central have been elected as leaders.

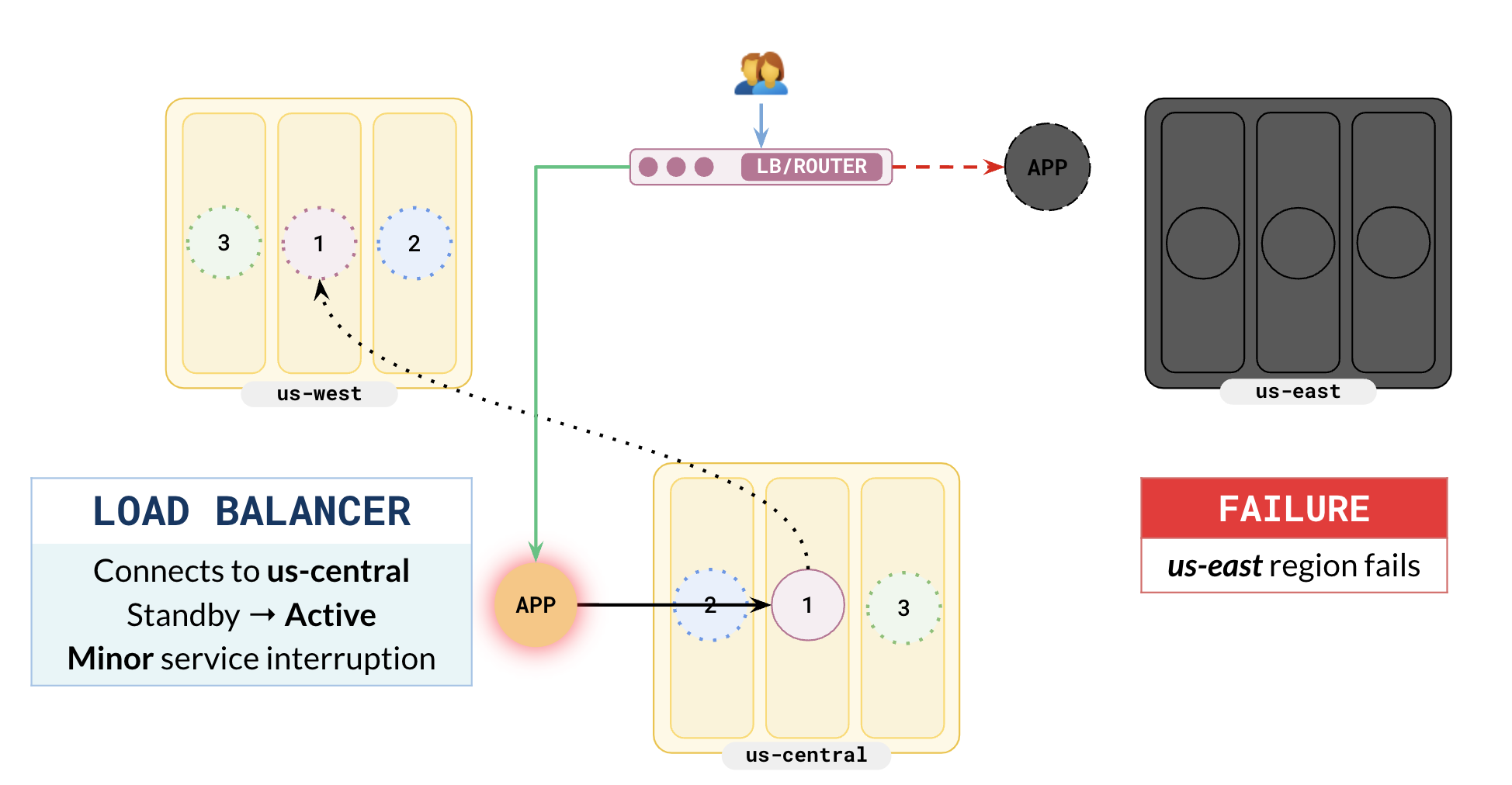

You will now have to fail over your applications. As you have already set up standby applications in us-central, you have to make changes in your load balancer to route the user traffic to the secondary region. The following illustration shows the load balancer routing user requests to the application instance in us-central.

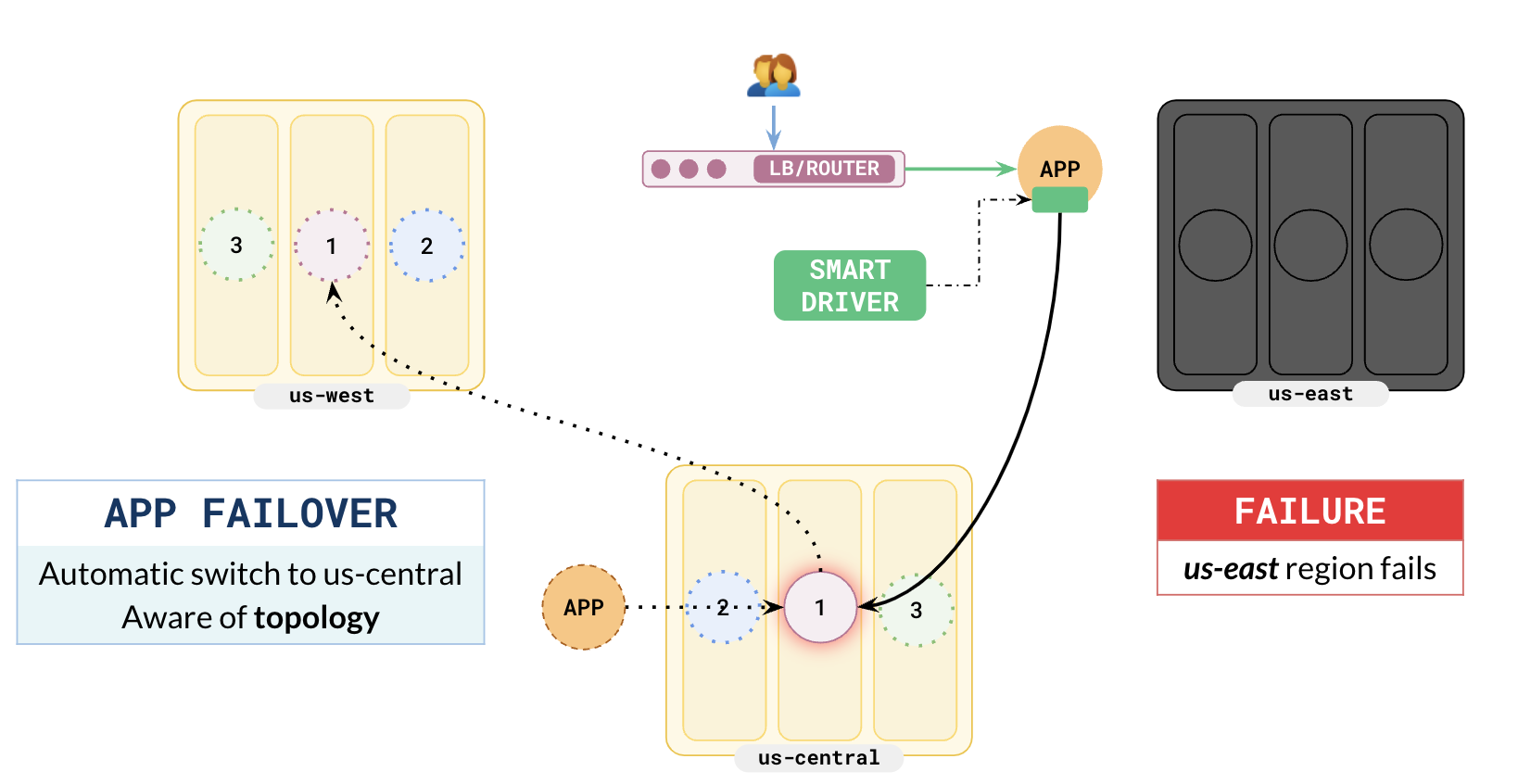

Smart driver

You can opt to use a YugabyteDB Smart Driver in your applications. The major advantage of this driver is that it is aware of the cluster configuration and will automatically route user requests to the secondary region in case the preferred region fails.
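With a smart driver, the region preference is usually expressed in the connection settings as a prioritized list of placements. The following sketch builds such a connection URL. It assumes the underscore parameter names (`load_balance`, `topology_keys`) used by several YugabyteDB smart drivers; the JDBC driver spells them with hyphens, so check the documentation for your driver. The `build_smart_driver_url` function is a hypothetical helper.

```shell
# Sketch: build a connection URL with fallback placement preferences.
# Assumes the default YSQL port (5433), the default yugabyte user and database,
# and the underscore parameter spelling (load_balance, topology_keys).
build_smart_driver_url() {
  local host="$1"; shift
  local keys="" priority=1 region
  # Regions are passed in order of preference; priority 1 is tried first.
  for region in "$@"; do
    keys="${keys}${keys:+,}aws.${region}.*:${priority}"
    priority=$((priority + 1))
  done
  echo "postgresql://yugabyte@${host}:5433/yugabyte?load_balance=true&topology_keys=${keys}"
}

build_smart_driver_url 127.0.0.1 us-east us-central us-west
```

With this ordering, the driver is expected to prefer nodes in us-east and fall back to us-central when no us-east nodes are reachable.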

The following illustration shows how the primary application (assuming it is still running) in us-east automatically routes the user requests to the secondary region in the case of preferred region failure without any change needed in the load balancer.

Region failure in a two-region setup

Enterprises commonly have one data center as their primary and another data center just for failover. For scenarios where you want to deploy the database in one region, you can deploy YugabyteDB in your primary data center and set up another cluster in the second data center as a backup, updated from the primary cluster using asynchronous replication. This is also known as the 2DC or xCluster model.