Handling node failures

The ability to survive failures and remain highly available is one of the foundational features of YugabyteDB. YugabyteDB is resilient to node failures. When a node fails, a leader election is triggered for all the tablets that had leaders on the lost node. A follower on a different node is quickly promoted to leader without any loss of data. The entire process takes approximately 3 seconds.

Suppose you have a universe with a replication factor (RF) of 3, which allows a fault tolerance of 1. This means the universe remains available for both reads and writes even if one fault domain fails. However, if a second fault domain were to fail (bringing the number of failures to two), writes would become unavailable in order to preserve data consistency.
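The relationship between replication factor and fault tolerance follows from majority-based replication: a write needs a majority of replicas to acknowledge it. The following is a minimal sketch of that arithmetic, assuming one replica per fault domain; the function names are illustrative and not part of any YugabyteDB API.

```python
def majority(rf: int) -> int:
    """Smallest number of replicas that forms a majority of rf replicas."""
    if rf < 1:
        raise ValueError("replication factor must be at least 1")
    return rf // 2 + 1


def fault_tolerance(rf: int) -> int:
    """Number of fault domains that can fail while a majority survives."""
    return rf - majority(rf)


def writes_available(rf: int, failed: int) -> bool:
    """True if the surviving replicas can still form a majority."""
    return rf - failed >= majority(rf)


# Walk through the RF=3 scenario described above.
for failed in range(3):
    print(f"RF=3, failed={failed}, writes available: {writes_available(3, failed)}")
```

With RF=3 the majority is 2, so one failure is tolerated and a second failure leaves too few replicas to accept writes.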

Set up a universe

Follow the setup instructions to start a single-region, three-node universe in YugabyteDB Anywhere, connect the YB Workload Simulator application, and run a read-write workload. To verify that the application is running correctly, navigate to the application UI at http://localhost:8080/ to view the universe network diagram, as well as latency and throughput charts for the running workload.

Observe even load across all nodes

You can use YugabyteDB Anywhere to view per-node statistics for the universe, as follows:

-

Navigate to Universes and select your universe.

-

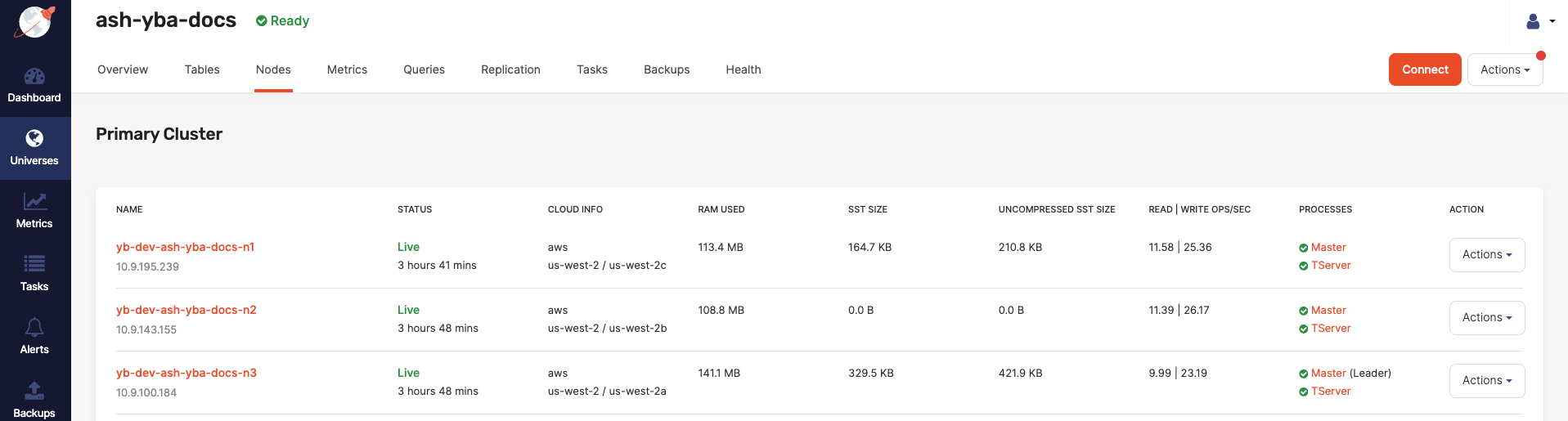

Select Nodes to view the total read and write IOPS per node and other statistics, as shown in the following illustration:

Notice that both the reads and the writes are approximately the same across all nodes, indicating uniform load.

-

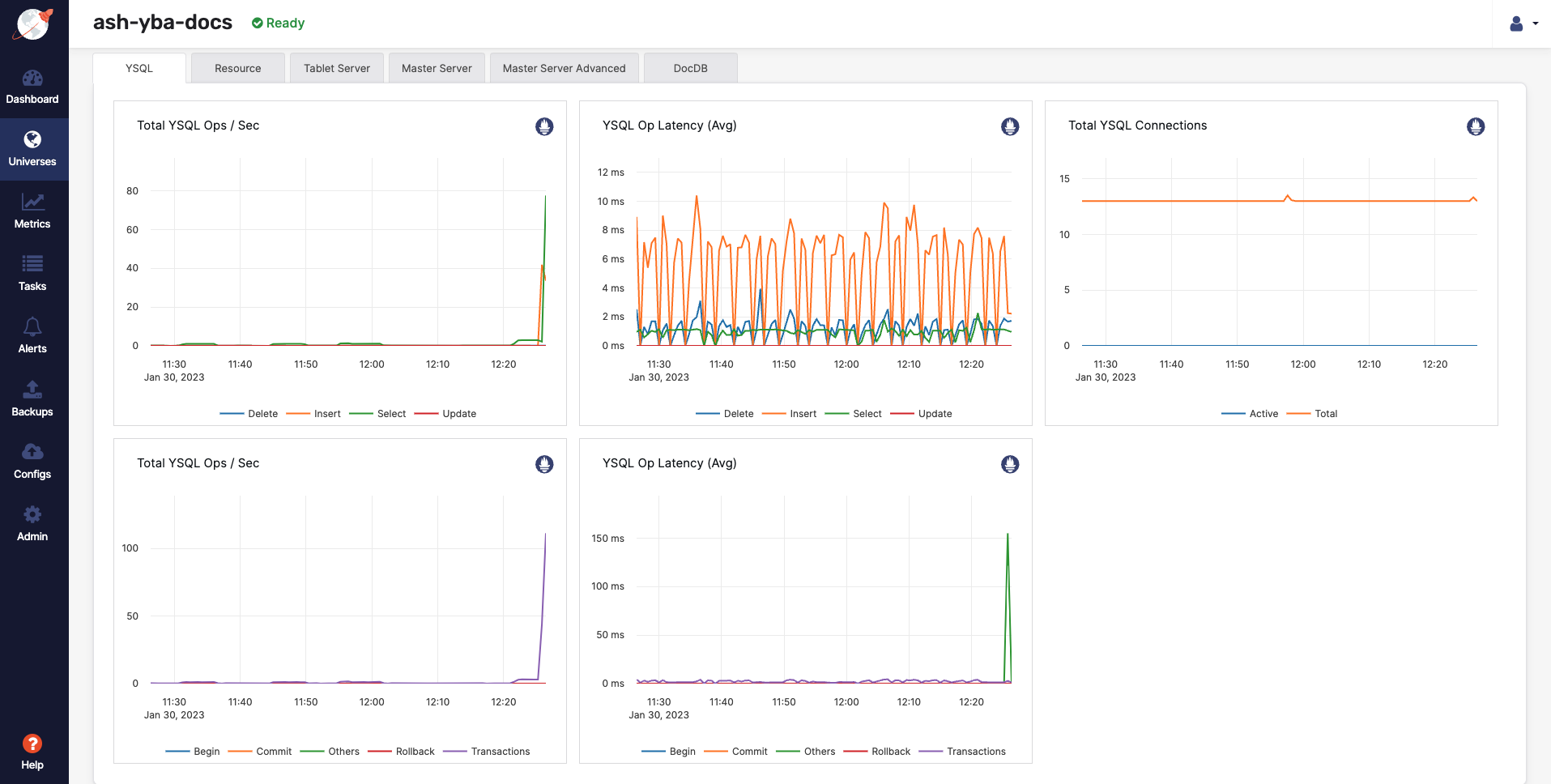

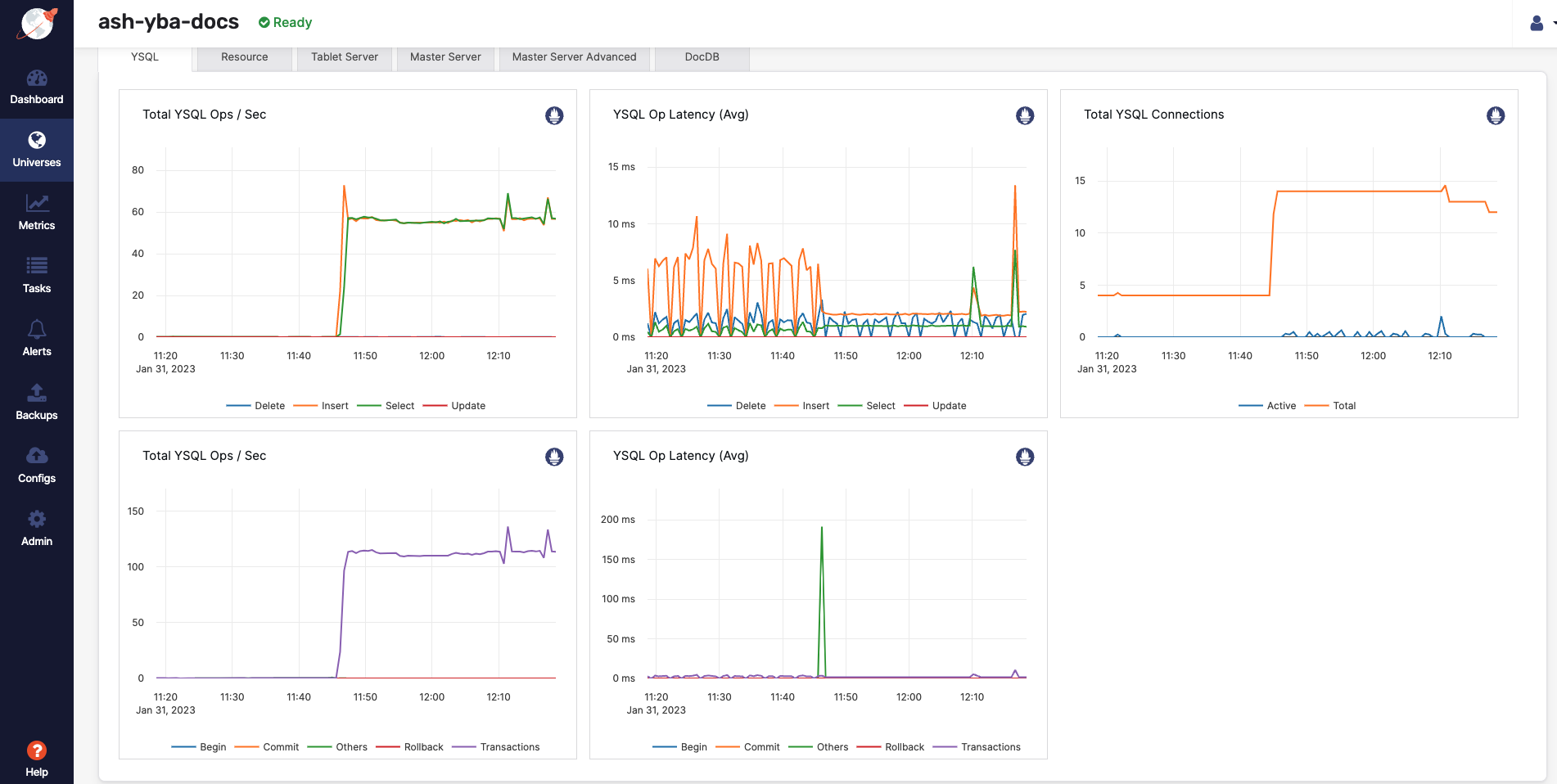

Select Metrics to view charts such as YSQL operations per second and latency, as shown in the following illustration:

-

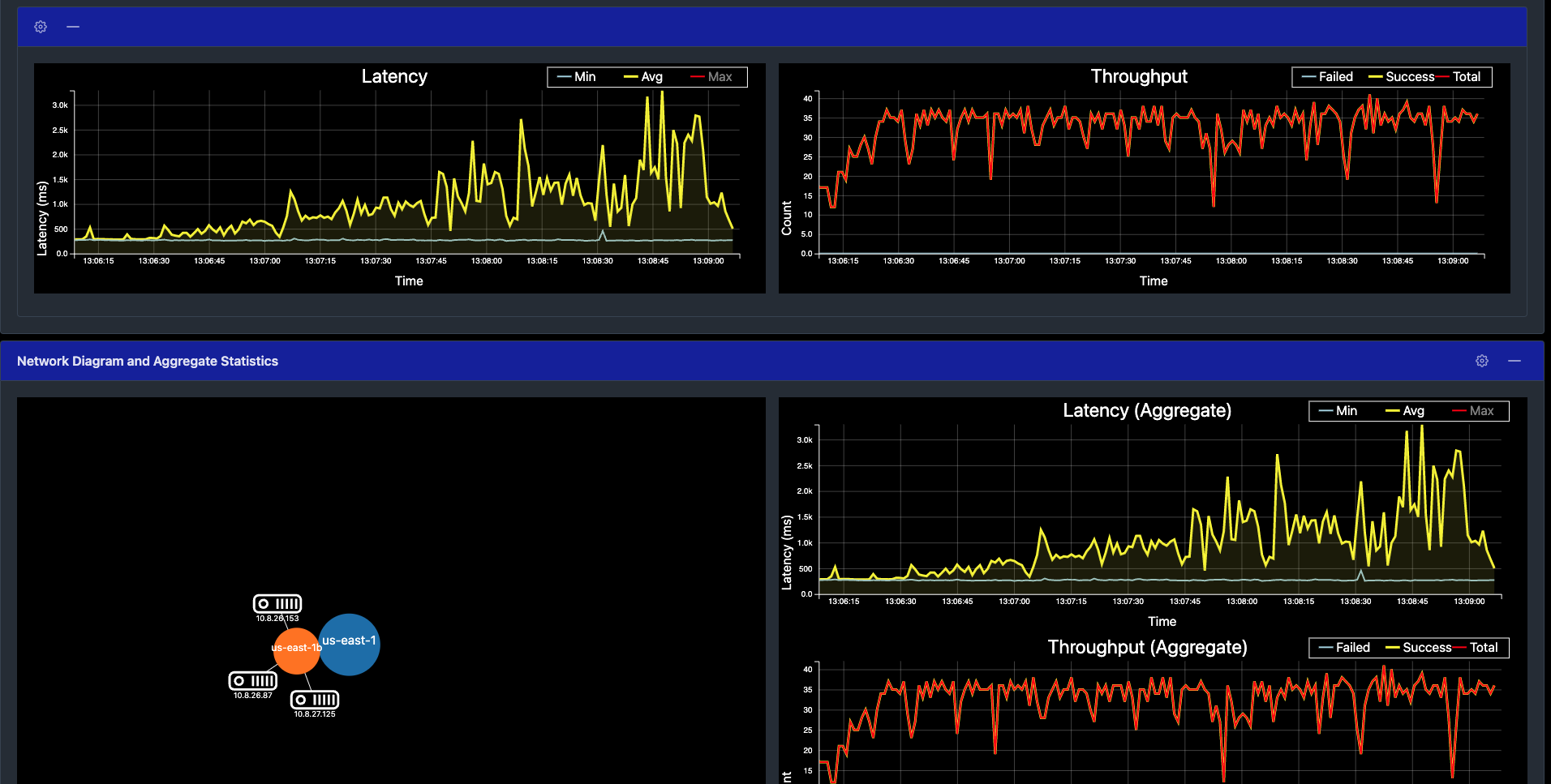

Navigate to the YB Workload Simulator application UI to view the latency and throughput on the universe while the workload is running, as per the following illustration:
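To put a number on "approximately the same across all nodes", one option is to compare the spread of per-node operations against their mean. The sketch below is only an illustration: the function name, node names, and sample values are invented, and the figures would have to be copied by hand from the Nodes view.

```python
from typing import Mapping


def load_imbalance(per_node_ops: Mapping[str, float]) -> float:
    """Return (max - min) / mean of per-node ops; 0.0 means perfectly even."""
    values = list(per_node_ops.values())
    if not values:
        raise ValueError("at least one node is required")
    mean = sum(values) / len(values)
    if mean == 0:
        return 0.0  # no traffic at all counts as even
    return (max(values) - min(values)) / mean


# Hypothetical read ops/sec per node (made-up numbers, not from the screenshots).
sample_read_ops = {"node-1": 1020.0, "node-2": 985.0, "node-3": 1003.0}
print(f"read imbalance: {load_imbalance(sample_read_ops):.1%}")
```

A value close to zero suggests uniform load; what counts as "close" is a judgment call for your workload.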

Simulate a node failure

You can stop one of the nodes to simulate the loss of a zone, as follows:

- Navigate to Universes and select your universe.

- Select Nodes, find the node to be stopped, and then click its corresponding Actions > Stop Processes.

Observe workload remains available

-

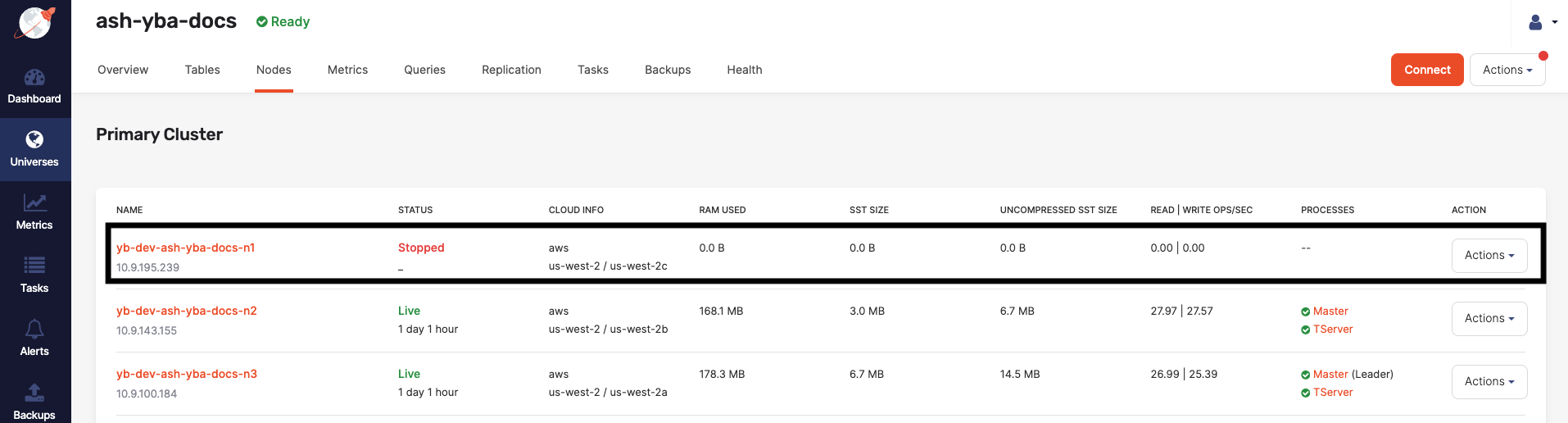

Verify the details by selecting Nodes. Expect to see that the load has been moved off the stopped node and redistributed to the remaining nodes, as shown in the following illustration:

-

Navigate to Metrics to observe a slight spike and drop in the latency and YSQL Ops / Sec charts when the node is stopped, as shown in the following illustration:

Alternatively, you can navigate to the YB Workload Simulator application UI to see the node being removed from the network diagram when it is stopped (it may take a few minutes to display the updated network diagram). Also notice a slight spike and drop in the latency and throughput, both of which resume immediately, as shown in the following illustration:

With the loss of the node, which also represents the loss of an entire fault domain, the universe is now in an under-replicated state.

Despite the loss of an entire fault domain, there is no impact on the application because no data is lost; previously replicated data on the remaining nodes is used to serve application requests.
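A request that is in flight at the moment the node stops may still experience the brief latency spike described earlier or, depending on the driver, a transient connection error. As a hedged sketch of how an application might ride out the roughly 3-second leader election, the helper below retries an operation with exponential backoff. The function name, the exception types treated as transient, and the delay values are all assumptions chosen for illustration; they are not part of YugabyteDB or the YB Workload Simulator.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_transient(
    operation: Callable[[], T],
    *,
    attempts: int = 5,
    base_delay: float = 0.5,
    transient: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation(), retrying on transient errors with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except transient:
            if attempt == attempts - 1:
                raise  # out of retries: surface the last error
            # Waits of 0.5 s, 1 s, 2 s, 4 s: enough to span a few seconds of failover.
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")


# Stand-in for a database call: fails twice, then succeeds (hypothetical).
calls = {"count": 0}

def flaky_query() -> str:
    calls["count"] += 1
    if calls["count"] <= 2:
        raise ConnectionError("simulated failover")
    return "ok"

# A no-op sleep keeps the demonstration instant.
print(retry_transient(flaky_query, sleep=lambda seconds: None))
```

In a real application, `operation` would wrap the driver call, and `transient` would list the specific exceptions your driver raises on connection loss.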

Clean up

You can shut down the local cluster by following the instructions provided in Destroy a local cluster.